As you might have noticed, a few months ago Codit Belgium moved to a brand-new office in Zuiderpoort near the center of Ghent.

One of the centerpieces, and my favorite, is our Codit Wall of Employees.

For these new offices Codit needed a visitor system that allows external people to check in, notifies employees that their visitor has arrived, etc. The biggest requirement was the ability to list all the external people currently in the office, for scenarios such as a fire.

That’s how Alfred came to life, our personal butler that assists you when you arrive in our office.

Thanks to our cloudy visitor platform in Microsoft Azure, codenamed Santiago, Alfred is able to assist our visitors but also to report on who is in the building, send notifications, etc.

We started off with our very own Codit Hackathon: dedicated teams worked on features and got introduced to new technologies, while more experienced colleagues taught others how to achieve their goals.

Our #hackathon has begun. Let’s create an amazing #app for the new #offices in #Ghent. 6 hours to go! #cloud #Azure pic.twitter.com/IVIFS63DRT

— Codit (@CoditCompany) November 3, 2016

Every Good Backend Needs A Good Frontend

For Alfred, we chose to build a Universal Windows Platform (UWP) app that is easy to use for our visitors. To prevent people from messing with our Surface, we even run it in kiosk mode.

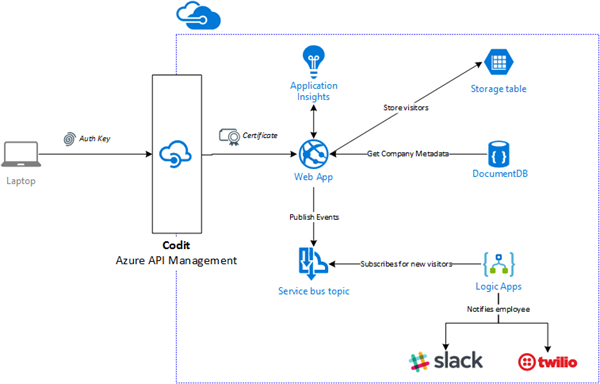

Behind the scenes, Alfred just communicates with our backend via our internal API catalog served by Azure API Management (APIM going forward).

This lets Alfred authenticate easily with a subscription key towards Azure API Management, after which Azure APIM forwards the request to our physical API and authenticates with a certificate. This allows us to fully protect our physical API while consumers can still easily authenticate with Azure APIM.
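As a minimal sketch of the consumer side, this is roughly what a subscription-key call looks like in Python. The gateway URL, the `/offices` path and the key are placeholders, not our real values; `Ocp-Apim-Subscription-Key` is the default header name that API Management checks. The request is only built here, not sent.

```python
import urllib.request

# Hypothetical values: placeholders, not the real gateway or key.
APIM_BASE_URL = "https://example-apim.azure-api.net/visitors"
SUBSCRIPTION_KEY = "<your-subscription-key>"


def build_request(path: str, subscription_key: str) -> urllib.request.Request:
    """Build a GET request that authenticates to API Management with a subscription key."""
    request = urllib.request.Request(f"{APIM_BASE_URL}{path}", method="GET")
    # Default header name used by API Management for subscription keys.
    request.add_header("Ocp-Apim-Subscription-Key", subscription_key)
    return request


# Build (but do not send) a request for the hypothetical /offices operation.
request = build_request("/offices", SUBSCRIPTION_KEY)
```

The certificate between APIM and the physical API never shows up in consumer code; that part is configured on the gateway.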

The API is the façade to our “platform” that allows visitors to check in and check out, sends notifications upon check-in, provides a list of all offices and employees, etc. To optimize costs, it is hosted as a Web App on the same App Service Plan as our Lunch Order website.

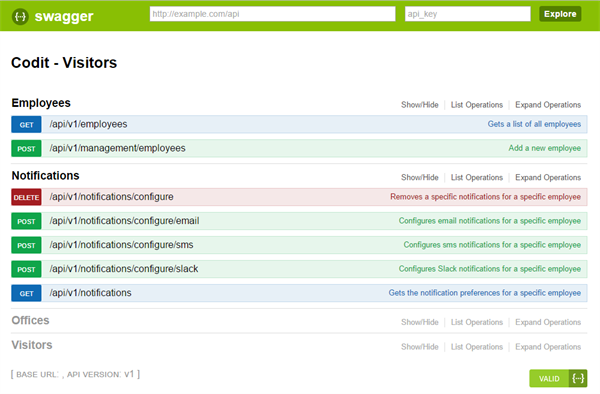

We are using Swagger to document the API for a couple of reasons:

- It is crucial that we provide a self-explanatory API that enables developers to see what the API offers at a glance and what to expect. As of today, only Alfred is using it but if a colleague wants to build a new product on top of the API or needs to change the platform, everything should be clear.

- Using Swagger enables us to make the integration with Azure API Management easier as we can create Products by importing the Swagger.

Storing Company Metadata in Azure Document DB

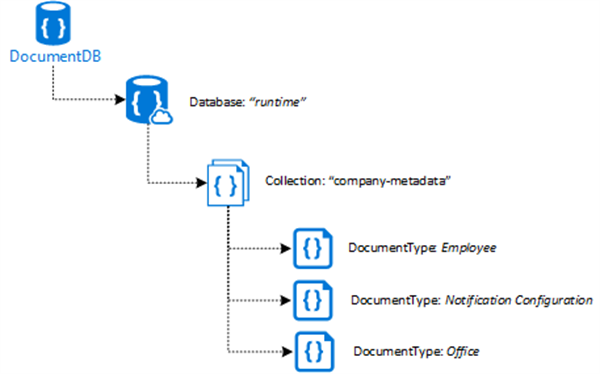

The information about the company is provided by Azure Document DB, where we use a variety of documents that describe what offices we have, who is working at Codit, what their preferred notification configuration is, etc.

We use a simple structure: each kind of information we store has its own document type, the documents link to each other, and they all live in one collection. By using only one collection we can group all the relevant company metadata in one place and save costs, since Azure bills for RUs per collection.

As an example, we currently have an Employee-document for myself, linked to a dedicated Notification Configuration-document that describes the notification I’ve configured. If I were to have notifications configured for both Slack and SMS messages, two Notification Configuration-documents would be stored.

This allows us to easily add and remove a document for each notification configuration of a specific employee, instead of using one document per employee and updating specific sections of it, which is more cumbersome.
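To make the structure concrete, here is a small in-memory sketch of one collection holding both document types. The field names (`type`, `employeeId`, `channel`) and ids are assumptions for illustration, not our actual schema, and a plain Python list stands in for the collection.

```python
# Hypothetical documents: field names and ids are illustrative only.
collection = [
    {"id": "emp-1", "type": "employee", "name": "Tom"},
    {"id": "notif-1", "type": "notification-configuration",
     "employeeId": "emp-1", "channel": "slack"},
    {"id": "notif-2", "type": "notification-configuration",
     "employeeId": "emp-1", "channel": "sms"},
]


def notification_channels(documents, employee_id):
    """Return the channels configured for one employee, in stored order."""
    return [
        doc["channel"]
        for doc in documents
        if doc["type"] == "notification-configuration"
        and doc["employeeId"] == employee_id
    ]


def remove_document(documents, document_id):
    """Removing a configuration is deleting one document; the employee stays untouched."""
    return [doc for doc in documents if doc["id"] != document_id]


channels_before = notification_channels(collection, "emp-1")
without_sms = remove_document(collection, "notif-2")
channels_after = notification_channels(without_sms, "emp-1")
```

Dropping the SMS configuration removes exactly one document, while the Employee-document is never rewritten.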

As of today, this is all static information but in the future, we will provide a synchronization process between Azure Document DB and our Azure AD. This will remove the burden of keeping our metadata up-to-date so that when somebody joins or leaves Codit we don’t have to manually update it.

Housekeeping For Our Visitors

For each new visitor that arrives we want to make their stay as comfortable as possible. To achieve this, we do some basic housekeeping now, but plan to extend this in the future.

Today, when a visitor is registered, we persist an entry in Azure Table Storage for that day and visitor so that our reporting knows who entered our office. After that we track a custom event in Azure Application Insights with some context about the visit and publish the event on an Azure Service Bus Topic. This allows us to be very flexible in how we process such an event, and if somebody wants to extend the current setup they can just add a new subscription on the topic.
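The flow can be sketched without any Azure SDK. In the example below, `PartitionKey` and `RowKey` are the real key properties of a Table Storage entity, but mapping them to the day and the visitor is my assumption here, and the `Topic` class is only an in-memory stand-in for a Service Bus topic to show the fan-out to subscriptions.

```python
from datetime import date


def build_visit_entity(visitor_name: str, host: str, day: date) -> dict:
    """Shape a visit as a table entity: one partition per day, one row per visitor."""
    return {
        "PartitionKey": day.isoformat(),  # assumption: the day groups the visits
        "RowKey": visitor_name,           # assumption: the visitor identifies the row
        "Host": host,
    }


class Topic:
    """In-memory stand-in for a topic: every subscription receives every event."""

    def __init__(self) -> None:
        self._subscriptions = {}

    def subscribe(self, name, handler) -> None:
        self._subscriptions[name] = handler

    def publish(self, event: dict) -> None:
        for handler in self._subscriptions.values():
            handler(event)


notified, audited = [], []
topic = Topic()
topic.subscribe("notify-employee", notified.append)
topic.subscribe("audit", audited.append)  # extending the setup is one more subscription

entity = build_visit_entity("Jane Doe", "Tom", date(2016, 11, 3))
topic.publish({"type": "VisitorRegistered", **entity})
```

The publisher does not know who is listening, which is why a new consumer never requires a change to the check-in code.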

Currently we handle each new visitor with a Logic App that fetches the notification configuration of the employee the visitor is meeting and notifies that employee through every configured channel we support: SMS, email and/or Slack.
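The real work happens in a Logic App, so the following is only a rough Python equivalent of the dispatch logic. The `notify` function, the sender functions and the `pager` channel are all hypothetical; the senders just record what they would send.

```python
def notify(channels, senders, message):
    """Send the message through every configured channel we have a sender for."""
    delivered = []
    for channel in channels:
        sender = senders.get(channel)
        if sender is None:
            continue  # configured, but not a channel we support
        sender(message)
        delivered.append(channel)
    return delivered


outbox = []


def make_sender(channel):
    """Create a fake sender that records the message instead of sending it."""
    def send(message):
        outbox.append((channel, message))
    return send


senders = {name: make_sender(name) for name in ("sms", "email", "slack")}

# "pager" is a made-up unsupported channel, to show that it is skipped.
delivered = notify(["slack", "sms", "pager"], senders, "Your visitor has arrived.")
```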

Managing The Platform

For every software product, it goes without saying that it should also be easy to maintain and operate the platform once it is running. To achieve this, we use a combination of Azure Application Insights, Azure Monitor and Logic Apps.

Our platform telemetry is handled by Azure Application Insights, where we send specific traces, track requests, measure dependencies and log exceptions, if any. This enables us to have one central technical dashboard to operate the platform, where we can use the Analytics feature to dive deeper into issues. In the future we will even add Release Annotations to our release pipeline to easily detect the performance impact of a release on our system.

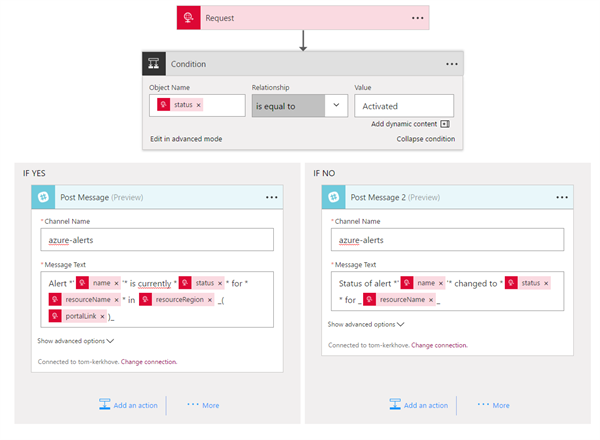

Each resource has a set of Azure Alerts configured in Azure Monitor that trigger a webhook hosted by an Azure Logic App instance. This consolidates all the event handling in one central place and provides us with the flexibility to handle them how we want, without having to change each alert’s configuration.
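The idea of one central handler can be illustrated in a few lines. The payload below is a deliberately simplified, hypothetical shape and not the actual Azure Monitor alert schema; the handler names and the resource name are made up as well.

```python
import json


def page_on_call(alert: dict) -> str:
    return f"paged on-call for {alert['resource']}"


def post_to_channel(alert: dict) -> str:
    return f"posted {alert['resource']} alert to the team channel"


# Changing how alerts are handled means editing this table, not every alert.
HANDLERS = {"critical": page_on_call}


def route_alert(payload: str) -> str:
    """Single entry point for all alerts; picks a handler based on severity."""
    alert = json.loads(payload)
    handler = HANDLERS.get(alert.get("severity"), post_to_channel)
    return handler(alert)


critical = route_alert(json.dumps({"resource": "visitor-api", "severity": "critical"}))
warning = route_alert(json.dumps({"resource": "visitor-api", "severity": "warning"}))
```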

Securing What Matters

At Codit, building secure solutions is one of our biggest priorities, if not the biggest. To achieve this, we use Azure Key Vault to store all our authentication keys, such as the Document DB key, Service Bus keys, etc., so that only authorized people and applications can access them, while we keep track of when and how frequently they do so.

Each secret is regenerated automatically with Azure Automation: every day we create new keys and store them in the corresponding secrets. This way the platform always uses the latest version, and leaked keys quickly become invalid, which reduces the risk.
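The pattern boils down to "write a new version, always read the latest". The sketch below uses an in-memory `SecretStore` as a stand-in for a vault with versioned secrets; the class, the `rotate` function and the secret name are hypothetical. A real rotation would also regenerate the key on the resource itself, which is left out here.

```python
import secrets


class SecretStore:
    """In-memory stand-in for a vault that keeps every version of a secret."""

    def __init__(self) -> None:
        self._versions = {}

    def set_secret(self, name: str, value: str) -> None:
        self._versions.setdefault(name, []).append(value)

    def get_secret(self, name: str) -> str:
        """Readers never pin a version, so they always get the latest one."""
        return self._versions[name][-1]

    def version_count(self, name: str) -> int:
        return len(self._versions[name])


def rotate(store: SecretStore, name: str) -> str:
    """Generate a fresh random key and store it as the newest version."""
    new_key = secrets.token_urlsafe(32)
    store.set_secret(name, new_key)
    return new_key


store = SecretStore()
first = rotate(store, "service-bus-key")   # day 1
second = rotate(store, "service-bus-key")  # day 2
current = store.get_secret("service-bus-key")
```

Because consumers ask for the secret by name only, a rotation needs no redeployment on their side.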

One might say that this platform is not considered a risk for leaking information, but we’ve applied this pattern because in the end we store personal information about our employees and it is a good practice to be as secure as possible. Applying this approach takes minimal effort, especially if you do this early in the project.

Security is very important; make sure you think about it and secure what matters.

Shipping With Confidence

Although Alfred & Santiago are developed as a side project, it is still important that everything we build is production ready and that we have confidence that everything still works. To achieve this, we use Visual Studio Team Services (VSTS), which hosts our Git repository. People can come in, work on features they like and create a pull request once they are ready. Each pull request is reviewed by at least one person and automatically built by VSTS to make sure that it builds and no tests are broken. Once everything is ready to go out the door, we can easily deploy to our environments by using release pipelines.

This makes it easier for new colleagues to contribute and provides an easy way to deploy new features without having to perform manual steps.

This Is Only The Beginning

A team of colleagues was willing to spend some spare time to learn from each other, challenge each other and have constructive discussions to dig deeper into our thinking. That’s what led to our first working version: a foundation on which we can add new features, try new things and make Alfred more intelligent.

Besides having a visitor system that is up and running, we also have a platform where people can consume the data to play around with and test certain scenarios with representative data. This is great if you ask me, because then you don’t need to worry about the demo data and can just focus on the scenario!

To summarize, this is our current architecture but I’m sure that it is not final.

Personally, I think that a lot of cloud projects, if not all, will never be “done”. Instead, we should look for trends that tell us how we can optimize the platform, and keep improving it.

Don’t worry about admitting your decision was not the best one – Learn, adapt, share.

Thanks for reading,

Tom Kerkhove
