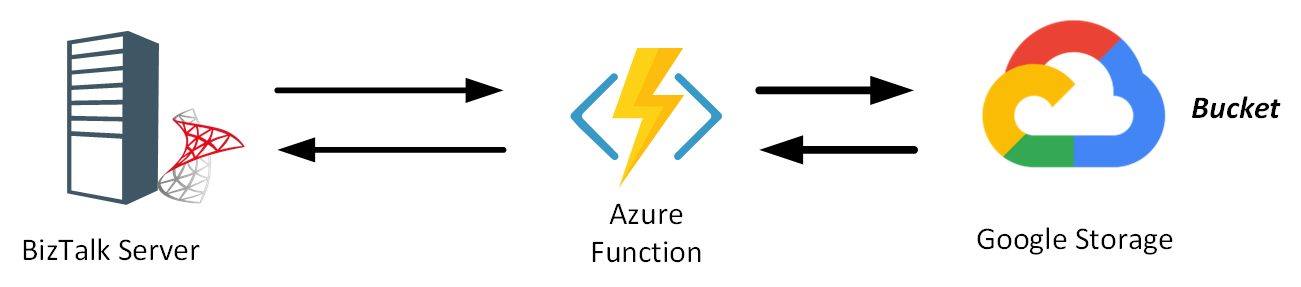

In our daily job, we face all kinds of integration challenges. Recently, we had to build a function that sends messages to Google Storage, specifically to a bucket, the equivalent of a container in Azure Storage. The messages originated from a BizTalk Server instance communicating with other on-premises systems.

The Google Storage account belongs to a third party we communicate with. Having some experience and knowledge of the Google Cloud, we started our journey. In this blog post, we discuss the part where an Azure Function interacts with the Google Storage service.

Google Storage

Google Storage is a service on the Google Cloud Platform (GCP) infrastructure that allows users to store and access data. Through the Google Cloud Console, we can access any Google Cloud service.

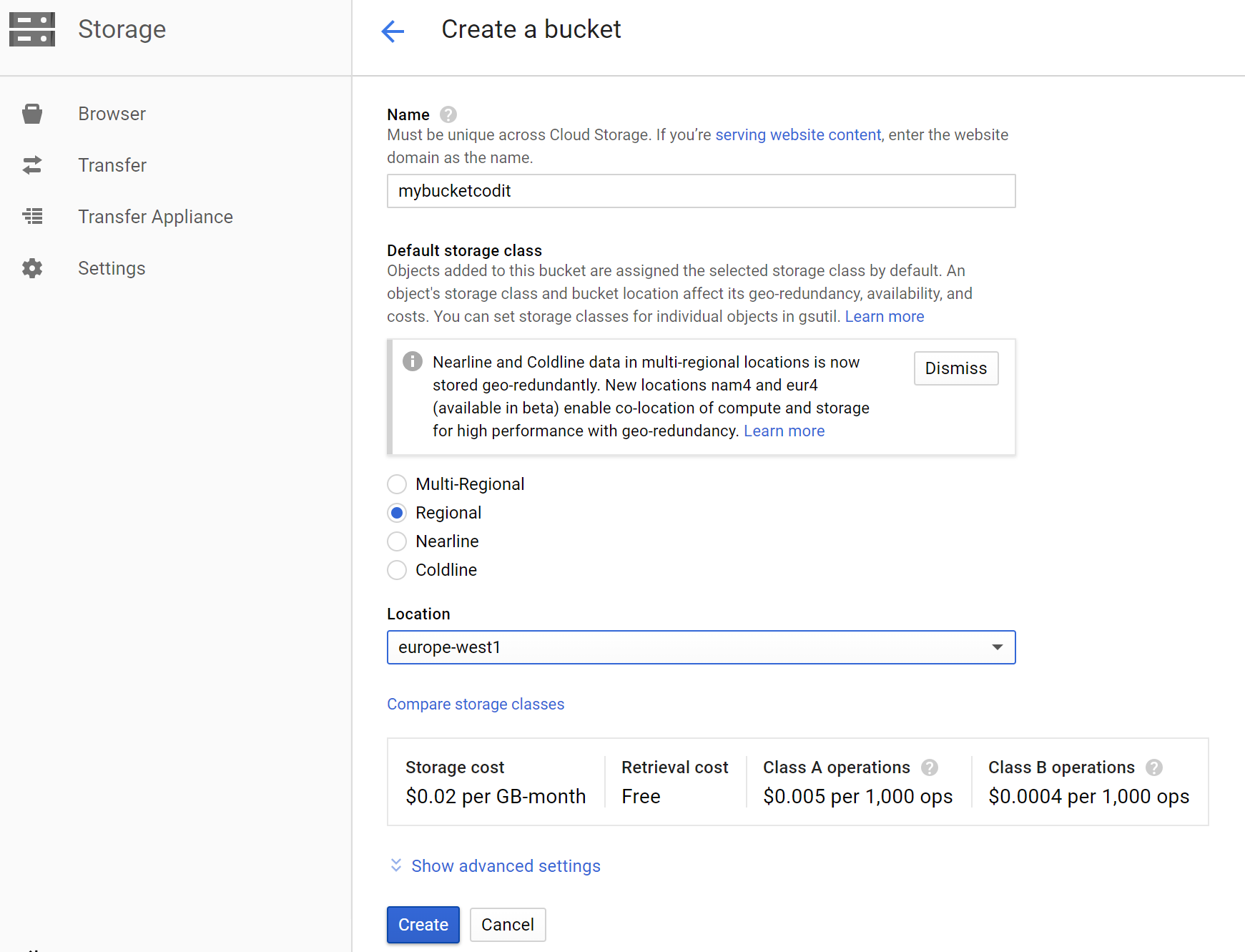

Within Google Storage, we can create a so-called bucket, similar to a container in Azure Storage. A bucket has three properties that you specify when you create it: a globally unique name, a location where the bucket and its contents are stored, and a default storage class for objects added to the bucket. Google Storage offers four storage classes: Multi-Regional Storage, Regional Storage, Nearline Storage, and Coldline Storage. With a storage class, you specify how geo-redundant, available, and accessible your bucket should be. The concept is similar to the combination of replication options (RA-GRS, GRS, LRS, and ZRS) and access tiers (hot, cool) in Azure Storage.
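For readers who prefer code over the Console, the three bucket properties map onto the `Bucket` type that ships with the Google.Cloud.Storage.V1 package. The following is only a minimal sketch and not what we did in this scenario, since our bucket was created by the third party. The `BucketSketch` class, the bucket name, and the `europe-west1` location are illustrative assumptions.

```csharp
using Google.Apis.Storage.v1.Data;
using Google.Cloud.Storage.V1;

public static class BucketSketch
{
    // Describes a bucket by the three properties chosen at creation time.
    public static Bucket Describe(string name, string location, string storageClass)
    {
        return new Bucket
        {
            Name = name,                 // must be globally unique
            Location = location,         // e.g. "europe-west1" (assumed region)
            StorageClass = storageClass  // e.g. "MULTI_REGIONAL", "REGIONAL", "NEARLINE", "COLDLINE"
        };
    }

    // Creates the bucket in the given project. The client's credentials
    // must be allowed to create buckets in that project.
    public static Bucket Create(StorageClient client, string projectId, Bucket bucket)
    {
        return client.CreateBucket(projectId, bucket);
    }
}
```

Splitting the description from the creation call keeps the property mapping visible without needing a live GCP project.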

The next step is setting up a service account in the GCP to enable you to access the bucket.

Google IAM

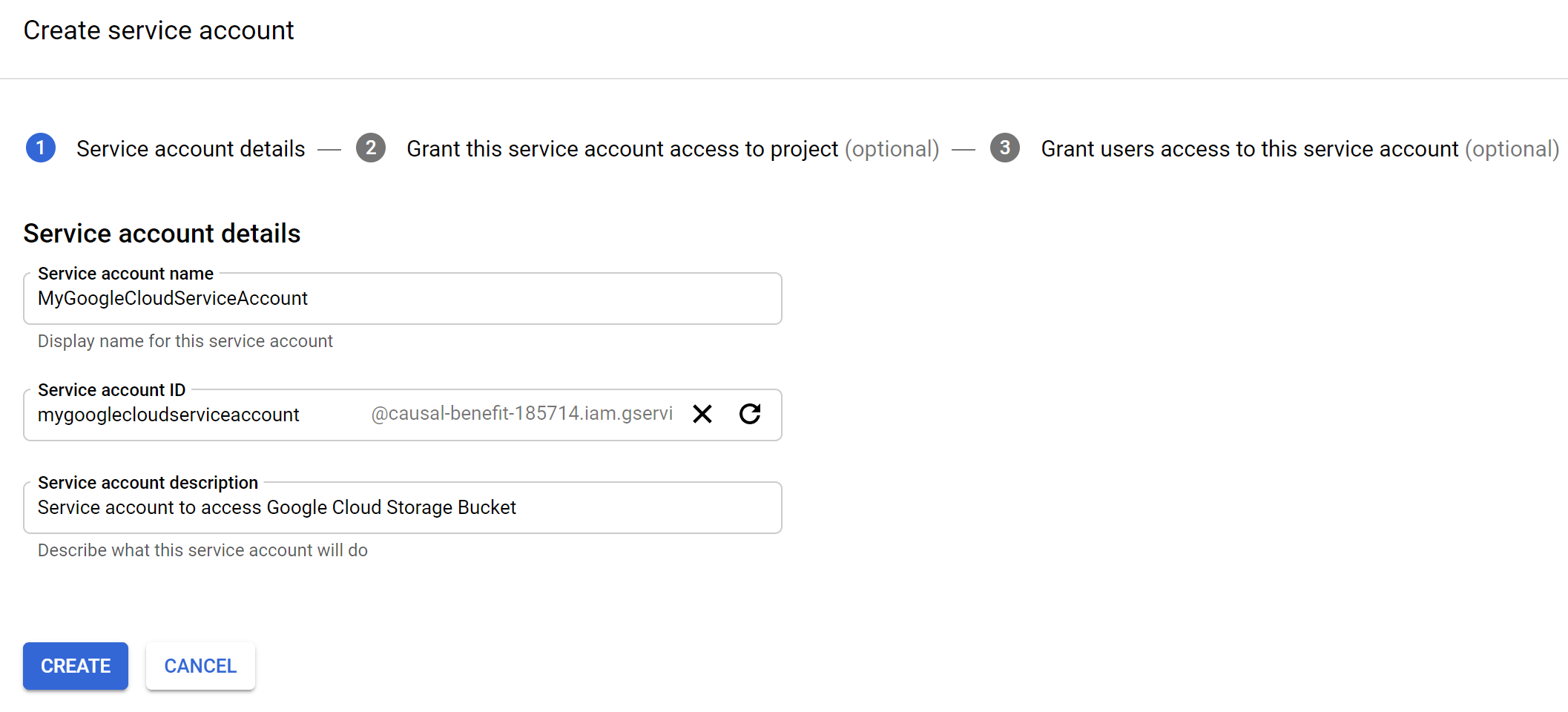

With the Google Cloud Console, you can access the Google Cloud Identity & Access Management (IAM) service on the GCP. This service gives admins fine-grained access control and visibility for centrally managing enterprise cloud resources. Through IAM you can create a service account granting access to your storage bucket. Moreover, you need this account within the Azure Function to send messages to the bucket.

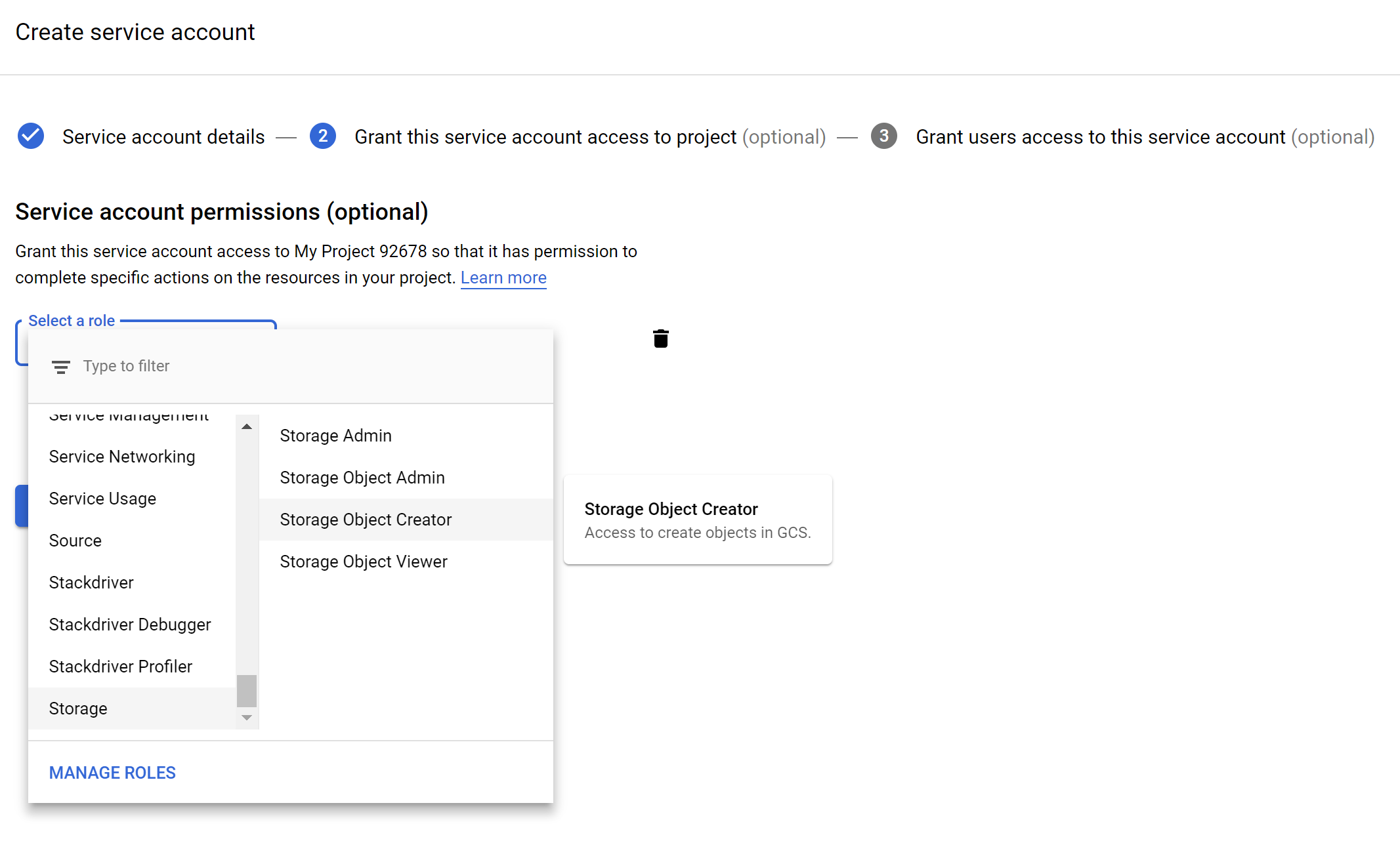

You create a service account and provide a name and a description for it. The service generates the service account ID for you. Next, you click Create and set the service account permissions, similar to policies on an Azure Storage container.

Optionally, you can grant users access to the service account. However, this is not necessary for our function. The next step is to create a key, which holds the credentials the function will use. The key can be in JSON or P12 format, and we chose JSON.

{
  "type": "service_account",
  "project_id": "causal-benefit-185714",
  "private_key_id": "b23c41b7ff7fd2dd12d29292a5d7622101a1de31",

“private_key”: “—–BEGIN PRIVATE KEY—–\nMIIEvAIBADANBgkqhkiG9w0BAQEFAASCBKYwggSiAgEAAoIBAQDSONJ5ZfmhV0sL\nr2//qJKHI0v1mjp14wqAuk/IkqK/aL+z9FBAMFf2HbdYWoyEypBt4r6k6jvz+hWY\nlJUgnWG2eWV3yftWYrgVpEngrPVl9zCNfi2W+zFbt38GaQhpRNh2uA6g1/w7EsEq\nTrOooZTWpF6JESsY2vH0Eo+RGHQP3arRbkUkfaLayBD4ExxYzineJA3a+xqsBw7Y\n82362vZdH3fGlr1PuVptQ6bMixZ3NXuAhzoO83LHe8sssC76CsgaGVk7Jaen62LF\nMsO3f7OoLpQnROHtUsjt4dgNgQ7xNgCLUDikwmM+0XbH7h4cj1xOWNDocpb6JmCJ\nnqMDeGoLAgMBAAECggEAOIuIMZc4Wil8yhvaaE4Te74GxH1DlEoJnS6AH1cx54Jp\nbiIdI+bdAhpkIqPYgC9sQeJnnTxT/AAcpvnwUuGCUu68WEhADrBnC4sxQ/nB2Ddx\nG94ArUfLsvvccwD1fjtCbkx7ws/VzJ3yz0p0ELvFqSZ1NzEjAoCB7EDB1SlarplY\nBi8Kgo63WDn5rnS6Eh3uaMHV9e6104BBJ8c//0VLsizdG0jwsziivsvWFtA+tTUJ\nQveEbkAPUUuqGZizxoCztJ6JTXaWEbjORt8yWfQC26jEO4FEh7AEidBFnGjUzJ1L\nDivjpxtYOx22e9q0wYaC326p2+3JkXrULsx7/rEK4QKBgQD8dBF/6HL+6K9sSBUO\n2w98TorgDYY2FSAcmNrHFn4YCLckTjwJ1LtTdTW2tPBgw6jg9mP5YQZHXhs2I/6E\n50d2apY3vTs7o7+/A/4aFpTFSaY6YFGMaKuKI4BRtpSFE1w+FKy3Ub9vEcsafKq0\n77+jeAJeNY9EbvErN6+3nAV8NwKBgQDVLN8ODjDzqOJrDngIZWfEwfVTy+Did1RR\nsNeJdlKaBjYH86X8NL4ojYoBBA1qDEFmbvDIHkOkVYUF+HJE1cMc3PazaZYGWvjt\neY9haCFKgPwuup0g/dHhLpLK/ceEnhid/bY91sXadvHGDVKfFCJRwiiJ5Zrr2jQj\ndoUZIeaezQKBgE3Q1SgRFYk/XftJiLwoh/BwIVyIrqry/g/yidU+OKXd4d3eA6Gg\nIhHKmkD0KvgYt3CIYi6XWqEbAEygca6zv5JfrmgF+0EZ61vMtkGCXl8loYhy8hAO\nn3mYEdCeL8+JNTCpnMdw+koZOPq0HMZi9DZGIqy5Y6zbaZlBs/crr4EnAoGAceyZ\nCBntb0pCNpR08Ye//Rbq1O2QMXc0SLQJfB0P5+CJ35YGjtJhDasWpZRU1ufVy7he\nVZRW8ewCOz6bUs4qh7JO5XL4Ck1z2vWr+pJ7uCVWoGJ6trbvAziwmmslxWn4eNZuXb3nrBgB0PrU2Y/4/Ig6Qqj8o/Ucan8ZcECgYAFv7boNrMDGbgLQM83l3Yo\nhDsWsQX5Onw/Esv46OCjwYyeU8GdfB6/Do1L6ToEY9h05eHNLve9g5xdK1ewOOSY\n9pGucXeVVyARXI05zmBIcaBz1JGQPSBSePLY9l4j/gdfIYPryfHS3wh1Yy7/dpw9\nOss22al4MThWfgSd1ol9Ew==\n—–END PRIVATE KEY—–\n”,

  "client_email": "mygooglecloudserviceaccount@causal-benefit-185714.iam.gserviceaccount.com",
  "client_id": "116821195121769834733",
  "auth_uri": "https://accounts.google.com/o/oauth2/auth",
  "token_uri": "https://oauth2.googleapis.com/token",
  "auth_provider_x509_cert_url": "https://www.googleapis.com/oauth2/v1/certs",
  "client_x509_cert_url": "https://www.googleapis.com/robot/v1/metadata/x509/mygooglecloudserviceaccount%40causal-benefit-185714.iam.gserviceaccount.com"
}

Once you create a key, it is downloaded to your machine, and you need to store it somewhere safe.

Azure Function

With the storage bucket and service account in place, we can start developing our Azure Function. You can build an Azure Function in a browser (Azure Portal) or with an IDE such as Visual Studio Code or Visual Studio. If you are creating a production-ready function, we recommend using an IDE for the benefit of debugging and productivity. Furthermore, you can push your code to Azure DevOps later and deploy your function to UAT and production environments. The in-browser option for creating functions is best suited for experimentation or a POC.

With Visual Studio 2017 version 15.5, you can create Azure Functions V1 (.NET Framework) and V2 (.NET Core). You select the Azure Functions template, choose the version, and pick a trigger (binding). In our integration scenario with Google, we chose version 2 with an HTTP trigger. Next, we selected the following NuGet packages for our function:

- Google.Apis.Core 1.36.1
- Google.Cloud.Storage.V1 2.3.0-beta04
- Microsoft.Azure.KeyVault 2.3.2
- Microsoft.Azure.Services.AppAuthentication 1.2.preview
- Microsoft.NET.Sdk.Functions 1.0.13

Note that the versions of the NuGet packages are essential for compatibility. It can be a struggle to find versions that work well together. During the development of the function, we encountered a few mscorlib errors, and it was a challenge to find the solution online.

With the listed NuGet packages and the code below we were able to send messages to a Google Storage Bucket.

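using System;
using System.IO;
using System.Text;
using System.Threading;
using System.Threading.Tasks;
using Google.Apis.Auth.OAuth2;
using Google.Cloud.Storage.V1;
using Microsoft.AspNetCore.Http;
using Microsoft.AspNetCore.Mvc;
using Microsoft.Azure.KeyVault;
using Microsoft.Azure.Services.AppAuthentication;
using Microsoft.Azure.WebJobs;
using Microsoft.Azure.WebJobs.Extensions.Http;
using Microsoft.Azure.WebJobs.Host;
using Newtonsoft.Json;

public static class HttpsGoogleBucket
{
    [FunctionName("HttpsGoogleBucket")]
    public static async Task<IActionResult> RunAsync(
        [HttpTrigger(AuthorizationLevel.Function, "get", "post", Route = null)] HttpRequest req,
        TraceWriter log,
        // Fully qualified to avoid a clash with System.Threading.ExecutionContext.
        Microsoft.Azure.WebJobs.ExecutionContext context)
    {
        // Read the request body; it becomes the content of the uploaded object.
        var data = await new StreamReader(req.Body).ReadToEndAsync();
        log.Info(data);

        // Wrap the payload in a stream, which is what the Storage client uploads.
        var stream = new MemoryStream(Encoding.UTF8.GetBytes(data));

        try
        {
            // Fetch the Google service account key (JSON) from Azure Key Vault.
            var azureServiceTokenProvider = new AzureServiceTokenProvider();
            var keyVaultClient = new KeyVaultClient(
                new KeyVaultClient.AuthenticationCallback(
                    azureServiceTokenProvider.KeyVaultTokenCallback));
            var googleProfile = (await keyVaultClient.GetSecretAsync(
                Environment.GetEnvironmentVariable("SecretIdGoogleProfile"))).Value;

            // Build the Google credentials and the Storage client from the key.
            var credentials = GoogleCredential.FromJson(googleProfile);
            var client = StorageClient.Create(credentials);
            log.Info("Connection to Google Storage succeeded!");

            // Upload the payload as a new object with a random (GUID) name.
            var bucketName = Environment.GetEnvironmentVariable("GOOGLE_BUCKET_NAME");
            var googleResponse = await client.UploadObjectAsync(bucketName, Guid.NewGuid().ToString(),
                "application/json", stream, null, CancellationToken.None);
            log.Info(JsonConvert.SerializeObject(googleResponse));
            return new ObjectResult(googleResponse);
        }
        catch (Exception e)
        {
            // Log the failure and return the message with a 500 status instead of the default 200.
            log.Error(e.Message, e);
            return new ObjectResult(e.Message) { StatusCode = StatusCodes.Status500InternalServerError };
        }
    }
}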

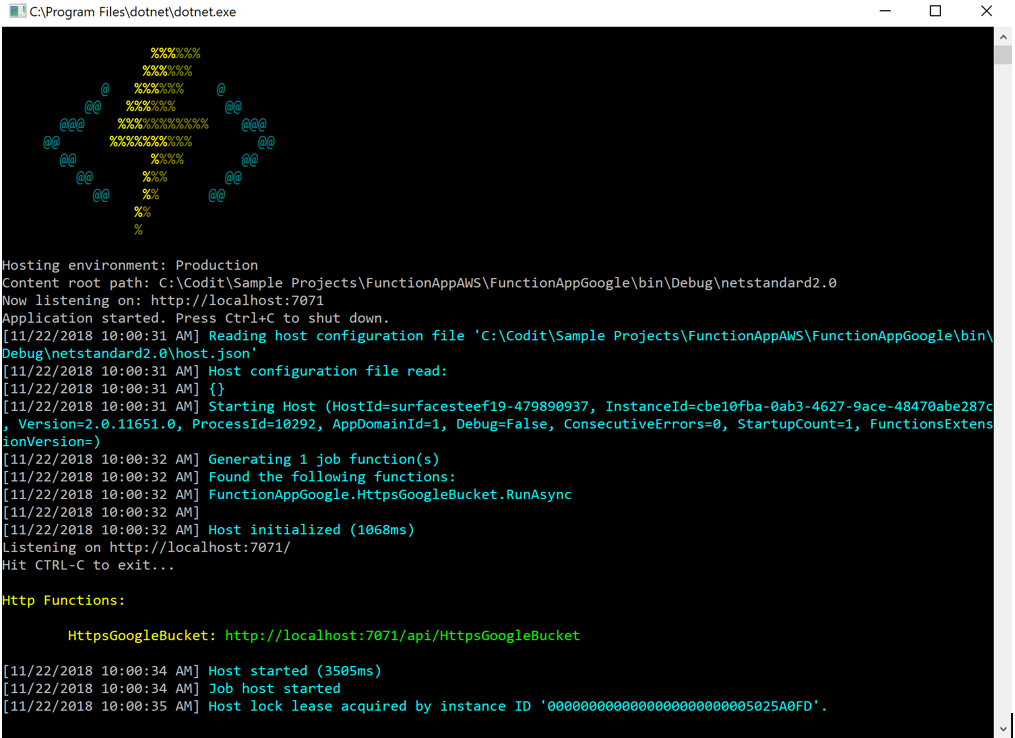

When running the function locally, an endpoint like the one shown below will be available.

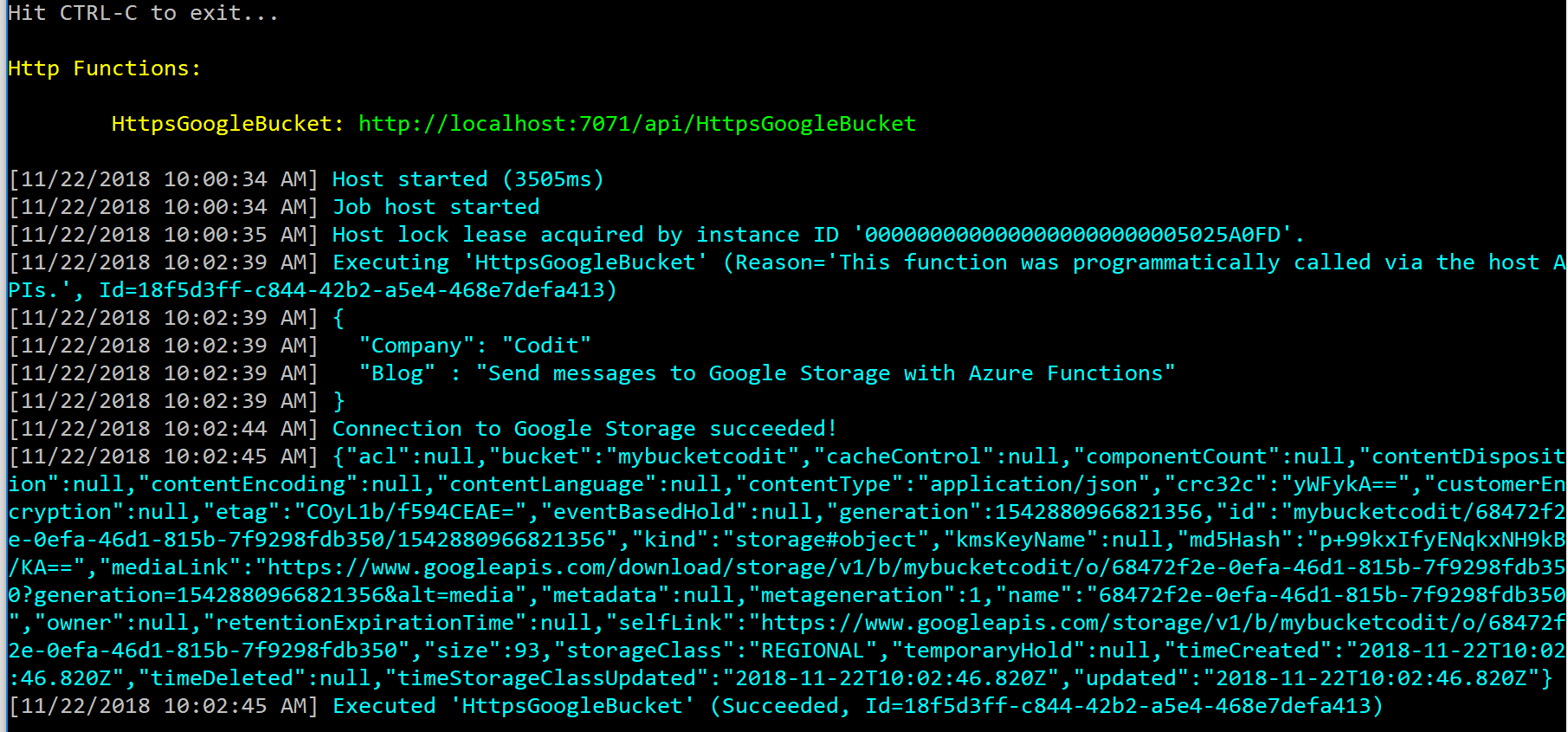

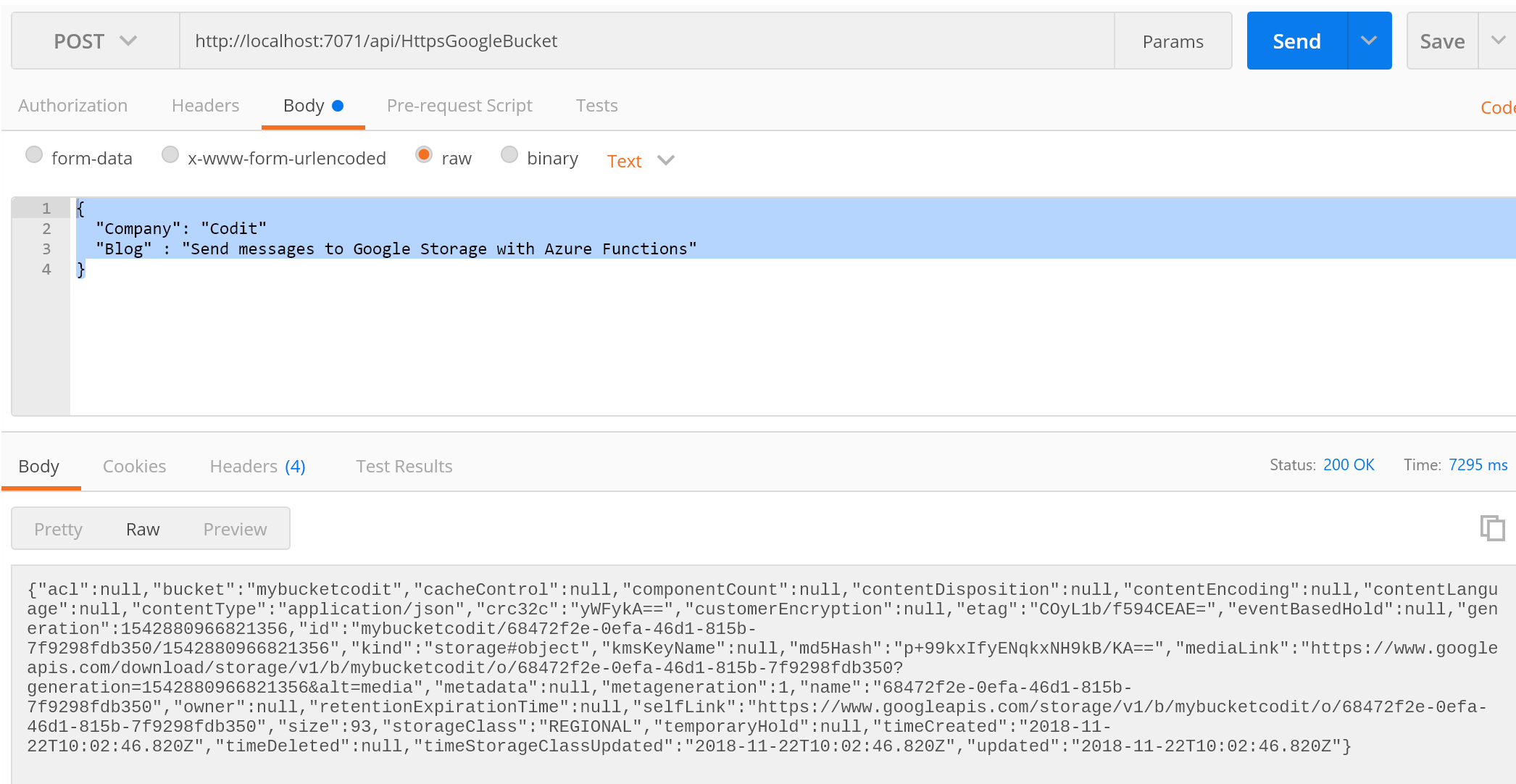

With Postman we can send a message to the endpoint. When we send the following payload to the endpoint:

{
  "Company": "Codit",
  "Blog": "Send messages to Google Storage with Azure Functions."
}

We observe the following in the console window:

And in Postman we can see the same result:

In our Google bucket, we can also access the message. In this case, we have not set any policy or restriction on viewing the message, so it is publicly accessible.
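If you want to repeat the Postman test from code, for example from a small console app, the request can be sketched with `HttpClient`. The URL below is an assumption: it combines port 7071, the default of the local Functions host, with the function name from this post, so replace it with the endpoint your own console window shows. A deployed function with `AuthorizationLevel.Function` additionally expects a function key, which this sketch leaves out. The `FunctionCaller` class is a hypothetical helper.

```csharp
using System;
using System.Net.Http;
using System.Text;
using System.Threading.Tasks;

public static class FunctionCaller
{
    // Assumed local endpoint; replace with the URL shown when the function starts.
    public const string LocalEndpoint = "http://localhost:7071/api/HttpsGoogleBucket";

    // Builds the POST request that carries the JSON payload.
    public static HttpRequestMessage BuildRequest(string url, string json)
    {
        return new HttpRequestMessage(HttpMethod.Post, url)
        {
            Content = new StringContent(json, Encoding.UTF8, "application/json")
        };
    }

    // Sends the payload and returns the response body produced by the function.
    public static async Task<string> SendAsync(HttpClient http, string json)
    {
        using (var request = BuildRequest(LocalEndpoint, json))
        using (var response = await http.SendAsync(request))
        {
            return await response.Content.ReadAsStringAsync();
        }
    }
}
```

Calling `await FunctionCaller.SendAsync(new HttpClient(), payload)` with the payload above should return the same body that Postman displays.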

How it works

Let’s explain how the code works. Communication with Google Storage from a .NET Core Azure Function (V2) is possible by leveraging the Google.Apis.Core 1.36.1 and Google.Cloud.Storage.V1 2.3.0-beta04 NuGet packages. The first NuGet package is necessary for authentication and authorization with any Google Cloud service. The so-called Google profile, the JSON key discussed earlier, provides our credentials. We pass these credentials to a Storage client, which is available through the second NuGet package.

var credentials = GoogleCredential.FromJson(googleProfile);

var client = StorageClient.Create(credentials);

We get the profile from Azure Key Vault since we are dealing with credentials. The JSON key is stored as a secret in Azure Key Vault.

var azureServiceTokenProvider = new AzureServiceTokenProvider();
var keyVaultClient = new KeyVaultClient(
    new KeyVaultClient.AuthenticationCallback(
        azureServiceTokenProvider.KeyVaultTokenCallback));
var googleProfile = (await keyVaultClient.GetSecretAsync(Environment.GetEnvironmentVariable("SecretIdGoogleProfile"))).Value;

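The SecretIdGoogleProfile value is read from the local.settings.json file, and the same goes for the bucket name. When the function runs in Azure, both values come from the Function App's application settings instead.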

{
  "IsEncrypted": false,
  "Values": {
    "AzureWebJobsStorage": "UseDevelopmentStorage=true",
    "AzureWebJobsDashboard": "UseDevelopmentStorage=true",
    "SecretIdGoogleProfile": "https://mykeyvaultinstance.vault.azure.net/secrets/GoogleProfile/bcc4b799a17540839f756cfaaa83f861",
    "GOOGLE_BUCKET_NAME": "mybucketcodit"
  }
}
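Because both values come from configuration, a missing or misspelled setting only shows up at run time and can surface as a less obvious error further down in the Key Vault or Storage call. A small guard can make that failure easier to diagnose. This is a sketch under that assumption; the `Settings` class and its `GetRequired` method are hypothetical helpers, not part of the Azure Functions SDK.

```csharp
using System;

public static class Settings
{
    // Returns the value of an application setting, or fails with a clear
    // message when the setting is missing or empty.
    public static string GetRequired(string name)
    {
        var value = Environment.GetEnvironmentVariable(name);
        if (string.IsNullOrWhiteSpace(value))
        {
            throw new InvalidOperationException(
                $"Application setting '{name}' is missing or empty.");
        }
        return value;
    }
}
```

In the function, `Settings.GetRequired("GOOGLE_BUCKET_NAME")` could then take the place of the direct environment lookup.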

There are various ways to obtain a secret from Key Vault; I predominantly used the Getting Key Vault Secrets in Azure Functions post from Jeff Hollan for guidance. The final step is uploading (sending) the message to a Google bucket.

var bucketName = Environment.GetEnvironmentVariable("GOOGLE_BUCKET_NAME");
var googleResponse = await client.UploadObjectAsync(bucketName, Guid.NewGuid().ToString(), "application/json", stream, null, CancellationToken.None);

The result of the upload action is returned to the caller of the function. If an error occurs, the function returns the error message instead.

Next steps

The next step, once you have created such a function that talks to a Google service, is to build and deploy it to the Azure UAT and production environments. You push the code to a repo in Azure DevOps and create build and release pipelines. This process will be explained in one of the upcoming blog posts.

Cheers,

Steef-Jan
