Opening Keynote by Jon Fancey (Microsoft) and Neil Webb (Solution Architect)

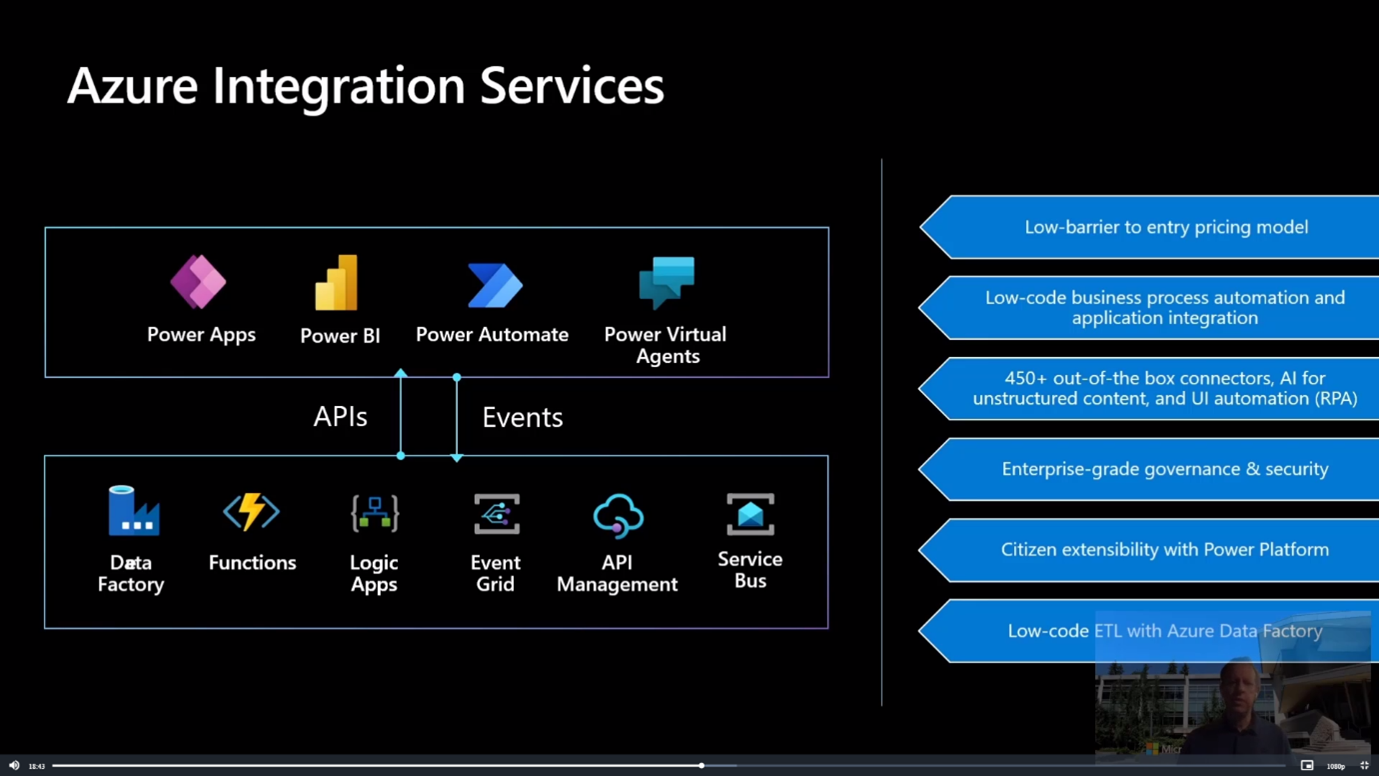

Azure Integration Services

Jon started his talk with Microsoft’s vision and end goal: to help everyone be, or become, a better integrator. The goal for Azure is to support integrations more and more. The focus lies on:

- Event Grid: Event raising and delivery

- Logic Apps: Microsoft Services and API

- API Management: Access control, analysis and overall management of APIs

- App Service: Websites

- Functions: Serverless computing

With the Power Platform, these underlying technologies become available to as many people as possible.

Logic Apps: Microsoft Services and API

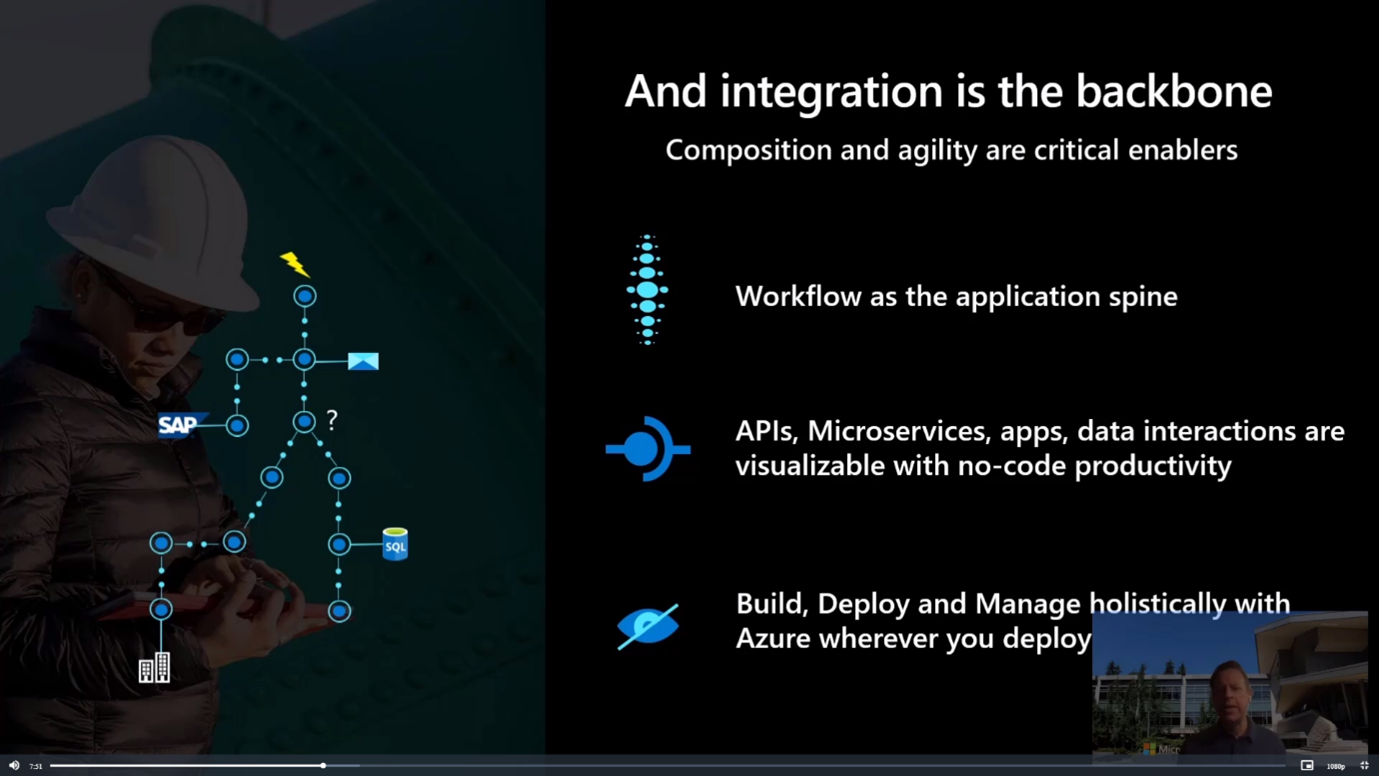

Microsoft has been working on a new Logic App platform. In their view, combined microservices are like workflows: calling different APIs and pulling it all together. Integration is the backbone of this transformation and the spine of these workflows.

The Logic App platform has been improved to support this view.

Features of the new Logic App platform include:

- A new user interface

- Application Insights support

- Scaling

- Visual Studio Code support with WYSIWYG editor

- Logic Apps can be run and tested locally, including breakpoints.

- Logic Apps can be run on an App Service Plan

- Logic Apps can be run on-premises with Azure Arc and a Kubernetes cluster. This can be combined with monitoring in Azure via Application Insights.

- Logic Apps can be deployed without ARM templates, using GitHub Actions written in YAML instead.

- Event raising and delivery: Event Grid

- Access control, analysis, and overall management of APIs: API Management

Step by step the Logic App platform is becoming more mature. It shows that the Logic App platform is here to stay and that we can expect even more improvements in the future.

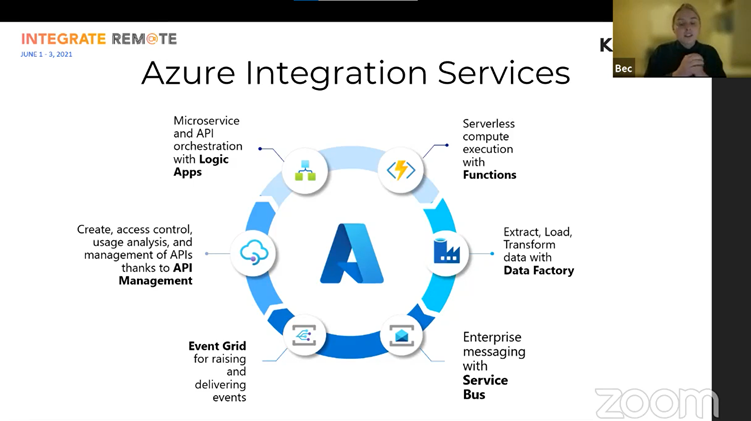

Azure Integration Services Realized

After the keynote, Bec Lyons (Program Manager – Logic Apps) called in from Australia to talk about end-to-end patterns and practices when using Azure Integration Services.

She kicked off the first part of her session with a recap of the Azure Integration Services package. Bec talked about the strengths of every component and how easily they can be chained together, so you can decide which technology best fits your particular workload.

A modern integration solution is built from a number of recurring key components, and AIS has a technology to meet each of these needs:

- API Management – for exposing and securing your APIs

- Event Grid – for your event-based integration flows

- Service Bus – for the enterprise messaging scenarios

- Logic Apps – workflow and orchestration

- Data Factory – for your ETL workloads

- Functions – for serverless code execution
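As a rough illustration of picking a technology per workload, the list above can be written down as a simple lookup. This is only a sketch: the workload labels used as keys are hypothetical names chosen for this example, not terms from the session.

```python
# Workload-to-service lookup based on the list above.
# The keys are hypothetical labels invented for this sketch.
AIS_SERVICE_FOR_WORKLOAD = {
    "api": "API Management",
    "events": "Event Grid",
    "messaging": "Service Bus",
    "workflow": "Logic Apps",
    "etl": "Data Factory",
    "code": "Functions",
}


def pick_services(workloads):
    """Return the AIS services for the given workload labels, in order, without duplicates."""
    services = []
    for workload in workloads:
        service = AIS_SERVICE_FOR_WORKLOAD[workload]  # raises KeyError for unknown labels
        if service not in services:
            services.append(service)
    return services
```

For example, a solution that exposes an API and orchestrates a workflow behind it would call `pick_services(["api", "workflow"])`.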

In the second part she recapped the newly released features of Azure Application Services with Arc-enabled Kubernetes clusters. Because this had already been touched on during the keynote, she kept it quite short. The key takeaway is that, with the new runtime, you can now “code once, deploy and run anywhere”. If you want to know more about this, read our blog “Running Azure PaaS anywhere using Azure application services with Azure Arc”.

Bec then showcased how to build these solutions end-to-end by showing a demo of how a modern digital integration hub would be developed with the new Azure Logic Apps runtime and Azure Functions as the integration layer.

All from within VS Code she created Functions and a Logic App using the new designer extension. She then triggered some (local) integration testing before pushing the changes to her code repository on GitHub. That triggered a build/release pipeline that deployed these resources to Azure AND to an Arc-enabled Kubernetes cluster running in her own test lab.

The new development and testing experience looks very promising and could relieve some of the pain points involved in building integration solutions on the consumption runtime today.

The Digital Integration Hub sample is available on GitHub if you want to try this out yourself.

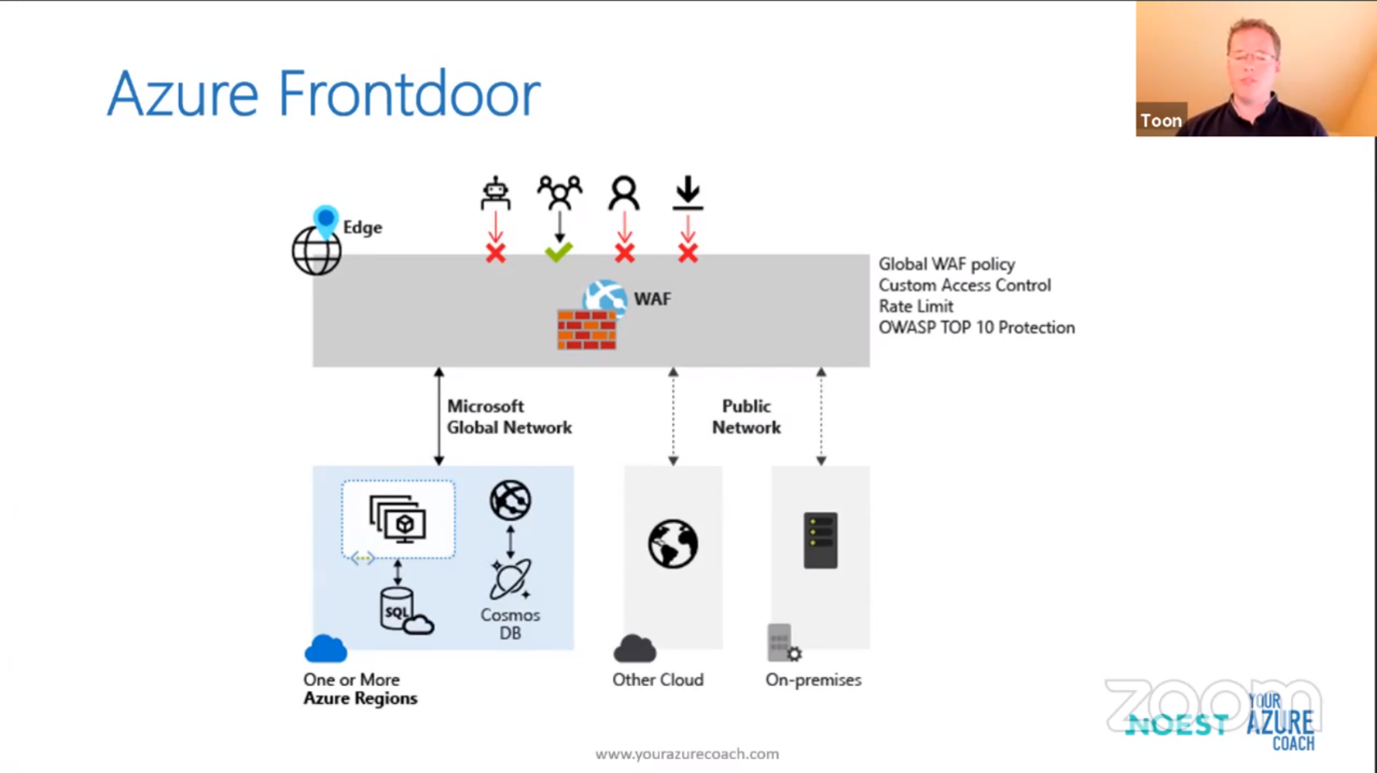

Secure your API program with Azure API Management

This session was presented by Toon Vanhoutte. Using a number of demos he showed how to secure your APIs.

In his first demo he built a simple API without any security, that just mocked a response.

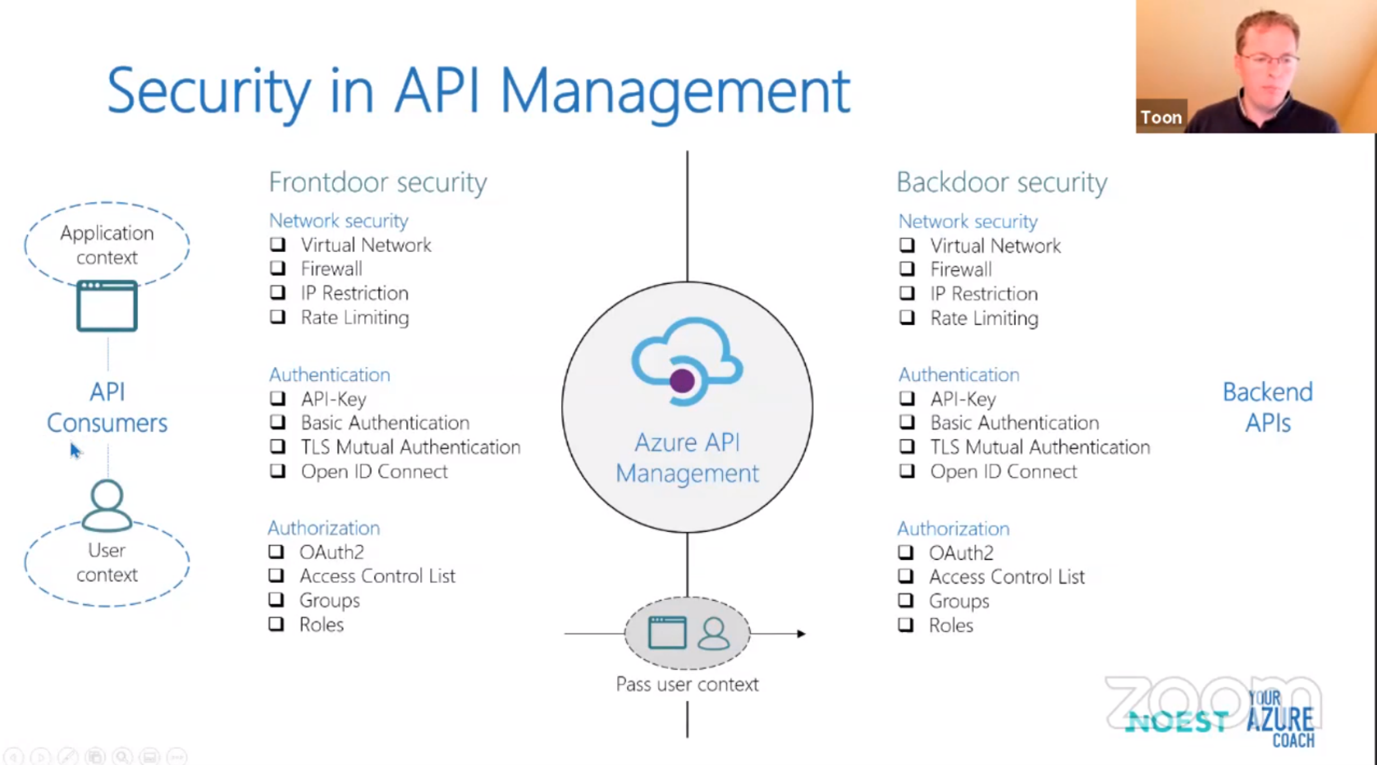

Then he talked about Frontdoor security, by which he means securing the API itself, for which a number of options are available. In his second demo, Toon applied Azure Front Door, which includes a WAF (Web Application Firewall) and load balancing, so you can call your API from multiple regions.

He changed the API so it only accepts calls from Front Door, using an APIM global policy.
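The recap does not show the policy itself. One common way to restrict a gateway to Front Door traffic is to compare the `X-Azure-FDID` header, which Azure Front Door adds to the requests it forwards, with the ID of your own Front Door instance. The Python below is only a sketch of that check, assuming this is the approach taken; a real APIM policy is written in XML, and the function names here are made up for illustration.

```python
FRONT_DOOR_ID_HEADER = "X-Azure-FDID"  # header Azure Front Door adds to forwarded requests


def is_from_front_door(headers, expected_front_door_id):
    """Return True only if the request carries the expected Front Door ID."""
    # HTTP header names are case-insensitive, so normalise them before the lookup.
    normalised = {name.lower(): value for name, value in headers.items()}
    received = normalised.get(FRONT_DOOR_ID_HEADER.lower())
    return received is not None and received.lower() == expected_front_door_id.lower()


def gate(headers, expected_front_door_id):
    """Return the status a gateway could answer with: 200 to continue, 403 to reject."""
    return 200 if is_from_front_door(headers, expected_front_door_id) else 403
```

A request that bypasses Front Door has no such header (or a different ID) and is rejected with a 403 in this sketch.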

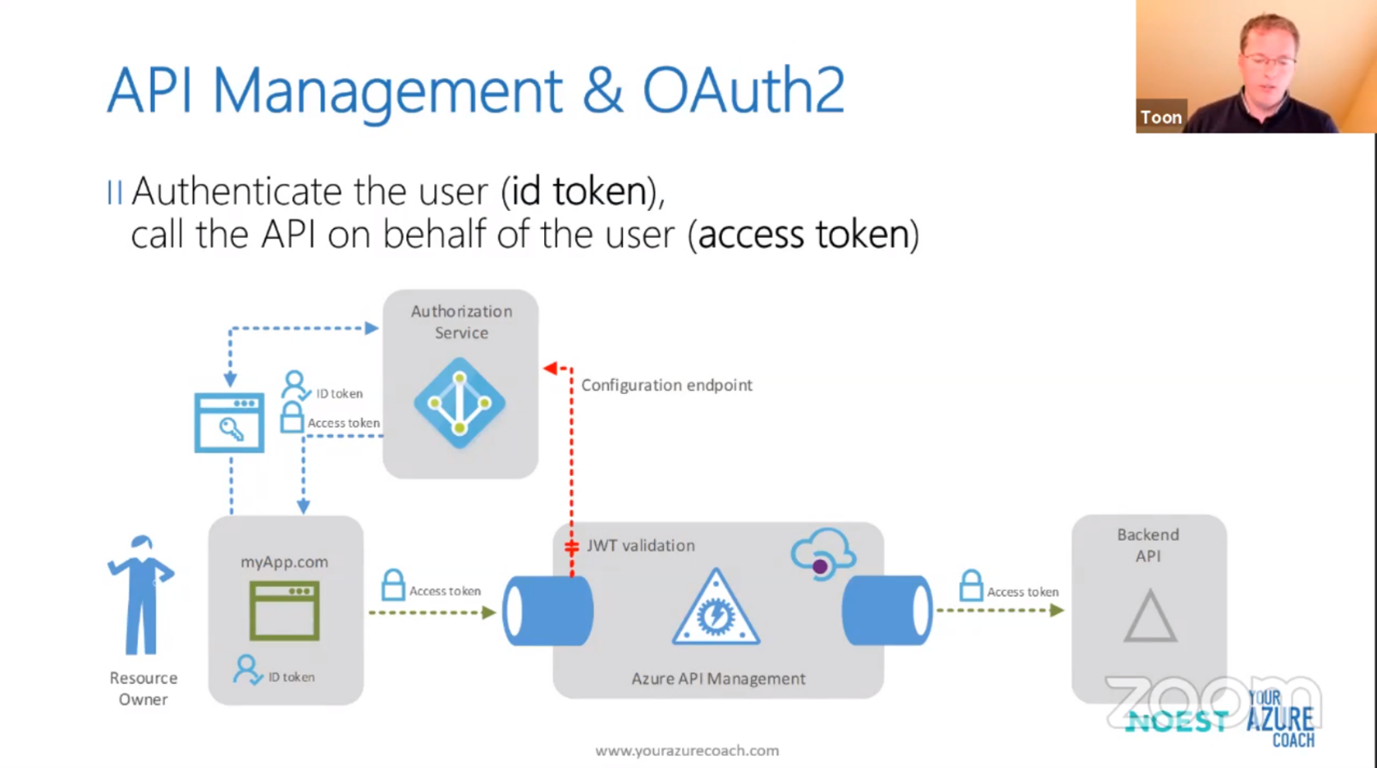

In his next demo, Toon showed how to add authentication and authorization to the API. He did this using OAuth2 and showed how to configure the required Active Directory entries: one for the API and one for the consumer. After that, he showed that when the API is called, the consumer not only has to acquire an access token but also has to give consent before the request can be processed.

Demo #4: instead of mocking the response, the call to the API was now forwarded to a Logic App, including passing application and user context to that Logic App. For this, he configured a backend in API Management, where all details of the Logic App endpoint are stored. That way, the API only needs to refer to that backend.
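The recap does not say which OAuth2 flow was used. A consent step suggests an interactive flow such as the authorization code flow, so the sketch below assumes that flow and the Microsoft identity platform v2.0 authorize endpoint. The function name is made up for illustration, and the tenant, client ID, redirect URI and scope are placeholders you supply yourself.

```python
from urllib.parse import urlencode


def build_authorize_url(tenant_id, client_id, redirect_uri, api_scope, state):
    """Build the URL the consumer opens to sign in and give consent (assumed auth code flow)."""
    query = urlencode({
        "client_id": client_id,        # the consumer's app registration
        "response_type": "code",       # ask for an authorization code
        "redirect_uri": redirect_uri,  # where the code is sent after sign-in
        "scope": api_scope,            # a scope exposed by the API's app registration
        "state": state,                # opaque value echoed back to the caller
    })
    return f"https://login.microsoftonline.com/{tenant_id}/oauth2/v2.0/authorize?{query}"
```

After sign-in and consent, the consumer exchanges the returned code for an access token and sends that token as a bearer token when calling the API.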

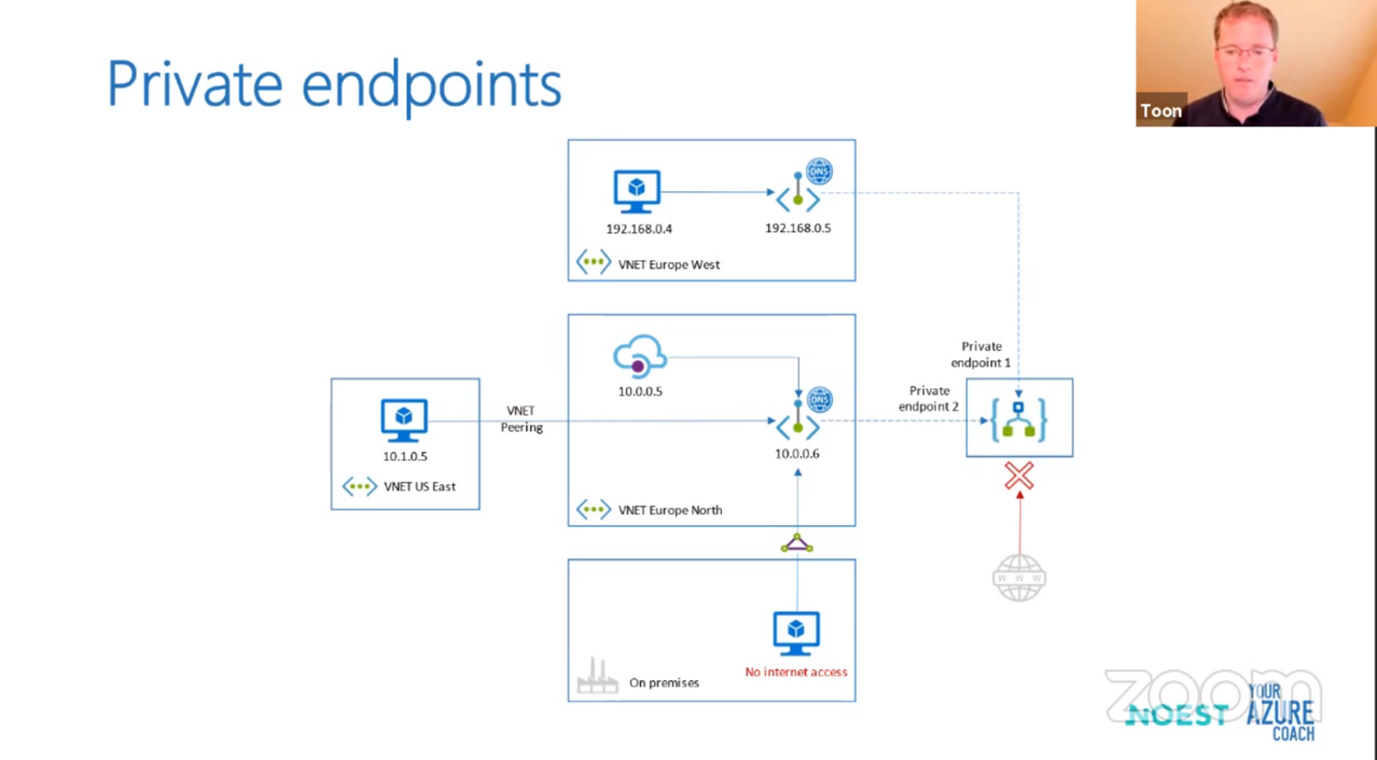

Then Toon talked about Backdoor security: making sure your backend API cannot be called directly, but only through the API. For this you again have several options, including using a Virtual Network. In the next demo, Toon showed that for Standard Logic Apps you can configure a Private Endpoint (during the Q&A session afterwards he added that this option is only available for Standard Logic Apps, not for the ‘old’ Consumption Plan Logic Apps, and only for APIM Premium).

In the final demo Toon showed how to add Authorization and Authentication to your backend API, while stressing that this is the responsibility of that backend API. He showed how you can secure a backend using a Named Value that is stored in Azure Key Vault.

He wrapped up his session by showing this overview, and stressing that if you want to really secure your API, you should make sure you tick all the right boxes!

A very nice session with demos in which Toon very clearly explained the mechanics of all the steps to take, but alas not a lot of shocking new revelations, except perhaps the use of Private Endpoint for Standard Logic Apps, which I found particularly interesting.

Enterprise Integration With Logic Apps

Session showcasing latest Enterprise Integration capabilities, including monitoring, SAP integration, and sharing plans and roadmap, by Divya Swarnkar, Senior Program Manager at Microsoft.

From day 1 the mission is to provide an application platform that enables everyone to be a developer. We provide a low-code/no-code platform that enables you to innovate faster, optimizing operations and management of applications. Our common goal is to transform your business and meet the ever-growing and changing customer needs, staying ahead of the competition.

In a world without Logic Apps, if you had to build an application that connects to multiple services, you would have to start from scratch: every service connection would mean becoming familiar with its APIs and SDKs. The next step would be to figure out the required authentication, the creation and registration of apps, and token management. The effort is proportional to the number of services used, and after this entire process we haven’t even started to solve business problems.

With Logic Apps this is a completely different story. You can start fast with 450+ connectors that span multiple industry verticals and scenarios (B2B, financial payments, ERPs, healthcare, etc.), with the confidence that, regardless of the number of services, the time to integrate with them is nearly constant. And if there is no out-of-the-box connector, we provide a simple and intuitive way to create your own custom connectors.

What’s new:

- Managed identity has been added to more and more connectors.

- New MSI support for connectors to Azure services: Service Bus, Event Hub, SQL

- Enterprise Integration Platform tools for VS2019, including a visual mapper to create XSLT-based transforms and schemas for file processing.

- Standard Logic Apps comes with a new connector model built into the runtime that provides better performance and more control.

- SAP connectors: new capabilities such as support for IDoc flat files, plain XML, stateful RFCs, transactions in RFCs, and certificate support in ISE

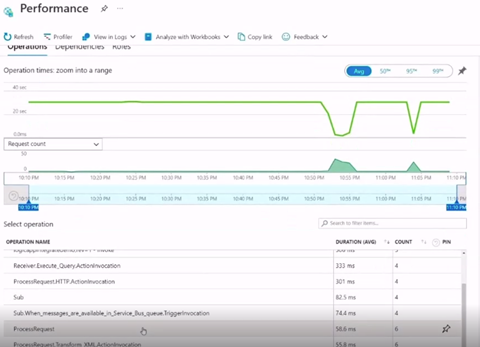

The first demo displayed the new options of an XML transform in VS Code: artifacts (maps, schemas) can now be used without the need for an Integration Account. Also, Application Insights offers a map visual where you can see at a glance the topology, components and external dependencies in action, and investigate and analyze the performance characteristics in depth.

The second demo displayed the private preview of OData entities and improvements to the SAP connectors, their usability and their support for advanced scenarios. As the number of SAP actions has grown substantially, an effort has been made to categorize them and improve discoverability. Stateful SAP BAPIs have been around for quite some time, allowing you to group calls together as a logical unit of work. The same concept now applies to multiple RFCs, allowing you to run the process in a stateful manner and as part of a transaction. Another common SAP task is to verify that sent data has been successfully recorded in the SAP tables. In most cases, there used to be a dependency on SAP access or expertise to figure out whether things were working as expected end to end. A new action that checks the status of the data and transactions sent to the SAP system simplifies this process.

What you should do and should not do while migrating your solutions to BizTalk Server 2020?

Migrating your solutions to BizTalk Server 2020 from a previous version is a challenge that many organizations face these days. In this session Sandro Pereira talked about the dos and don’ts and the challenges of migrating an environment to BizTalk Server 2020, a topic that will return in the new book “Migrating to BizTalk Server 2020” later this year. He also talked about the option of migrating such an environment to Azure instead.

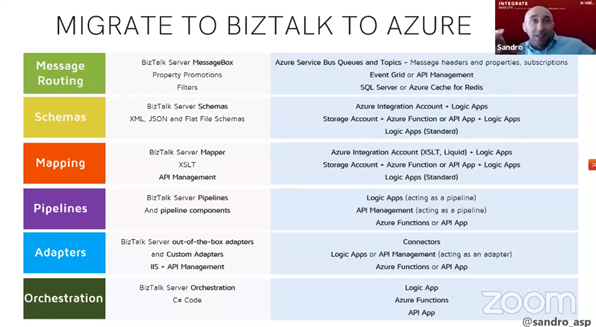

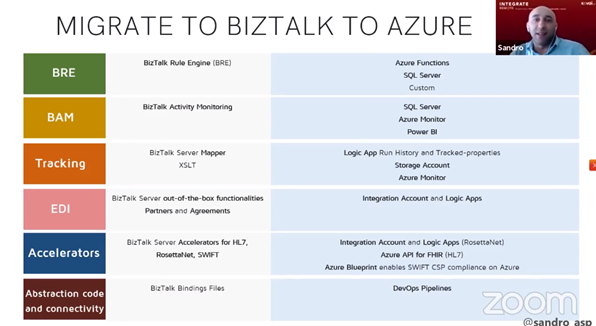

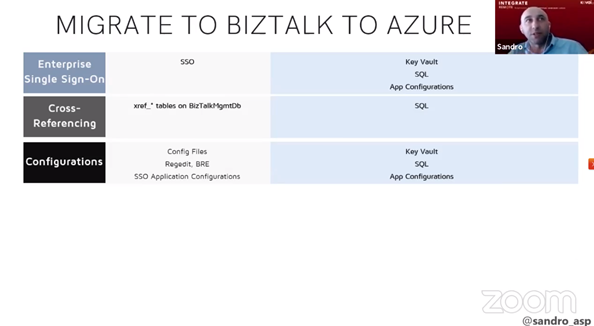

Migrate from BizTalk to Azure

When migrating from a BizTalk environment to Azure, it’s not just a simple question of translating BizTalk artifacts to Azure services, because there are a lot of options.

Some guidance can be found by comparing the logical components of an integration solution, listed as BizTalk artifacts or as Azure components and services. Sandro reminded us that there is no magic formula, as the design of the new environment will be guided by functionality, costs and of course the client’s requirements and preferences.

Migrate to BizTalk Server 2020

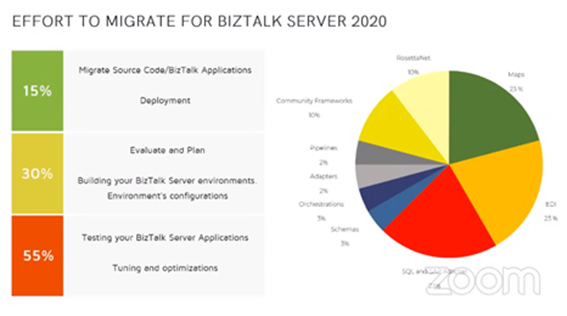

For the main topic of the session, Sandro focused on the migration of a BizTalk environment to BizTalk Server 2020 side-by-side. He also shared with us the estimates and statistics on the effort required to migrate to BizTalk Server 2020.

Some rules to follow for efficient migrations:

- Evaluate, Plan and Implement – Evaluation and planning before implementation can spare a lot of headaches and double work later on, so this is a very important step!

- Application Migration Order – The migration can be executed in an order based on the complexity, size or compatibility of the environments.

- Migrate The Solution As-Is – The advice is to always try to move the solutions as-is for all non-breaking features. Breaking features such as changes in mappings or deprecated adapters will still have to be fixed, but migrating as-is will reduce the chance of introducing errors when attempting to improve on what previously worked.

The steps to migrate the solution code are pretty straightforward for a simple solution: just copy the solution to the new environment and open it with the new version of Visual Studio. Visual Studio will do a lot of the work for you. Then fix the .NET Framework version and the deployment properties, fix or validate the strong name key, build and deploy.

More complex solutions will require some extra work:

- Transformations using XSLT mapping will need to be checked

- There are some limitations on string concatenation in an orchestration (for example, the build will fail if you concatenate more than four strings inside an expression shape)

- Deprecated adapters have been removed from BizTalk Server 2020, so you might have to fix the solutions that were using the old adapters

Furthermore, migrating from an environment older than BizTalk Server 2010 is an additional challenge, as you will need a “jumping” environment. In that case, you will first migrate the code to the 2010 version and only then to the 2020 version.
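The “jumping” rule can be sketched as a small helper that returns the hops for a given source version. The version list is my own enumeration of BizTalk Server releases from 2004 onwards, not something shown in the session, and the function name is made up for illustration.

```python
# BizTalk Server releases in order (my own listing, not from the session).
BIZTALK_VERSIONS = ["2004", "2006", "2006 R2", "2009", "2010", "2013", "2013 R2", "2016", "2020"]
JUMP_VERSION = "2010"    # intermediate stop for anything older than 2010
TARGET_VERSION = "2020"


def migration_hops(source_version):
    """Return the versions to migrate to, in order, starting from source_version."""
    index = BIZTALK_VERSIONS.index(source_version)  # raises ValueError for unknown versions
    if source_version == TARGET_VERSION:
        return []  # already on the target version
    if index < BIZTALK_VERSIONS.index(JUMP_VERSION):
        return [JUMP_VERSION, TARGET_VERSION]  # older than 2010: go via the jumping environment
    return [TARGET_VERSION]
```

For example, `migration_hops("2006 R2")` yields a two-step path via 2010, while `migration_hops("2013")` goes straight to 2020.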

Last but not least, there are some difficult parts:

- Migrating the BAM (Business Activity Monitoring) data – This is technically possible, but it is advisable to test the whole process before it is performed on the PROD database.

- Cross-Reference databases – The standard way is to build XML files with all data using CLT

- Migrating RosettaNet trading partner agreements – You will need to manually recreate all the process configurations, organizations and agreements in the new environment

Sandro spared some time in his session to talk about in-place migrations, mostly to warn us of the risks. Such migrations are a big bang and are usually considered too risky to apply directly to a production environment. For starters, the existing BizTalk environment needs to be down to perform this migration, which might mean more downtime than the business is accustomed to. Also, the underlying .NET version and other related software must all be supported by the current platform and by BizTalk Server 2020. On top of that, there are limited rollback options in case of disaster.

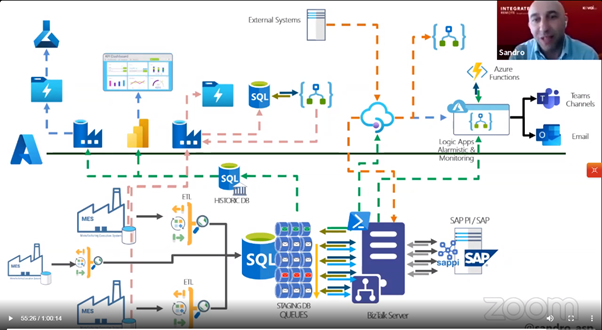

Sandro ended the session with an example of a project that integrates BizTalk Server, MES and Azure (Integration) services.

Integration Modernization

The last session of the first day was given by Jon Fancey (Principal Group PM), Bec Lyons (Program Manager) and Chris Houser (Principal Program Manager) and covered modernizing your integration environment.

Jon started off by giving some reasons why you should modernize your environment and several paths you can take: lift and shift, modernize, or rewrite your applications.

Next up was Bec, explaining that integration is evolving from a single point of connectivity for your on-premises applications to also include mobile and SaaS applications. Many of the old monolithic applications are being migrated to the cloud and ingest data from IoT and big data sources as well, so your integrations need to be adapted to this paradigm change. A modern integration will consist of various parts of the Azure Integration Services: Logic Apps, Functions, Data Factory, Service Bus, Event Grid and API Management. These are all individually scalable and extensible, allowing you to take full advantage of the cloud.

Modernizing your applications to the cloud allows you to be more agile (faster time to market), scale more easily (keep total cost of ownership under control), secure your services with modern methods (Azure Sentinel, Azure AD) and innovate (keep up with the latest changes and protocols).

Following Bec was Chris, responsible for Host Integration Server, giving an overview of how you can modernize your mainframe integrations. He talked about the current and upcoming IBM mainframe connectors. The IBM 3270 connector is currently available for consumption-based Logic Apps, and the IBM MQ connector is GA for the new Logic Apps Standard runtime. Chris then went on to demonstrate the MQ connector in the new runtime. The upcoming connectors include IBM Host File, IBM DB2, IBM IMS DB and IBM CICS.

Afterward Jon took over once again to talk about how to modernize your BizTalk Server. He focused on the BizTalk Migration Tool, created to speed up your migration from BizTalk to Azure Integration Services; the tool works with all versions from 2010 onwards. He guided us through the capabilities of the tool and how it works, ending with the roadmap for the tool: support for Logic Apps Standard, WCF / SAP adapters, performance improvements and BRE.

Finally, the session ended with Bec asking the audience some questions about their current environment and setup, and whether or not they were already working on their modernization journey.
