Image sourced from Microsoft

In this article, we briefly discuss why Microsoft Fabric was needed, the challenges posed by Microsoft’s existing analytics services and how Fabric aims to address them, and then give an overview of its objectives, design, and benefits.

Why is there a need for change?

Although Microsoft already offers a robust suite of analytics services that together form a complete platform, building on that suite poses the following challenges:

- Varied Products & Experiences

- Proprietary & Open Systems

- Mix of Dedicated & Serverless

- Diverse Business Models

- Steep Learning Curve

- Expertise & Integration Demands

Fabric addresses these challenges head-on, offering a unified and streamlined analytics solution that spans the spectrum of data integration, engineering, warehousing, real-time analytics, and data science.

Mission

Fabric is not merely a tool; it’s a strategic move by Microsoft to revolutionize the analytics landscape. The main goals of the new SaaS offering include:

- Offering customers a price-performant, easy-to-manage, complete modern analytics solution.

- Acting as an enterprise tool that empowers everyone, from experts to non-technical users, to create value from data.

- Streamlining and unifying the user experience, compared with the current fragmented products.

- Enabling persistent data governance and a single security model ("One Security") across workloads.

- Offering a single capacity pricing model.

- Providing AI-driven features such as Copilot across workloads.

Design

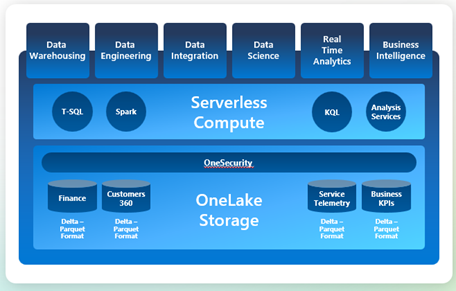

The heart of Fabric lies in its design. It integrates Microsoft products across the analytics journey, from Data Integration and Data Engineering to Data Warehousing, Real-Time Analytics, and Data Science, culminating in a unified Power BI experience. This cohesive platform connects each stage smoothly, improving overall efficiency and collaboration.

- Storage Layer:

- OneLake is the storage layer of Fabric. It is a single SaaS lake that is auto-provisioned, and all stages/workloads of Fabric read and write to this single OneLake.

- Auto-indexing of data in OneLake allows for easy discovery, sharing, governance, and compliance.

- All tabular data is stored in a single common, open-standard format: Delta Parquet (Parquet files with a Delta Lake transaction log).

- Compute Layers:

- All compute engines, such as SQL, Spark, KQL, and Analysis Services, automatically store their data in OneLake.

- All compute engines have direct access to OneLake data without requiring any additional imports.

- Access Control:

- Control Plane: Each user’s permissions are determined by one of four workspace roles: Admin, Member, Contributor, and Viewer.

- Data Plane: A shared, universal security model is enforced on top of OneLake so that data is accessible only to users with the appropriate privileges. This security model is enforced consistently across all compute engines.

- Shortcuts:

- Shortcuts are objects in OneLake that point to other storage locations, either internal or external to OneLake. A shortcut is independent of its target: deleting a shortcut leaves the target unaffected, but moving, renaming, or deleting the target path can break the shortcut.
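The shortcut behavior described above can be illustrated with a toy in-memory model. This is only a sketch under our own assumptions: `ToyOneLake` and its methods are hypothetical names, not part of any Fabric or OneLake API, and real shortcuts are managed through Fabric itself. The model stores each shortcut as a reference to a target path, so reads resolve through it, deleting the shortcut never touches the target, and moving the target leaves the shortcut dangling.

```python
from dataclasses import dataclass, field


# Hypothetical toy model of OneLake shortcuts; this is NOT a Fabric/OneLake API.
@dataclass
class ToyOneLake:
    # Real data: path -> contents.
    files: dict[str, bytes] = field(default_factory=dict)
    # Shortcuts: shortcut path -> target path (a reference, not a copy).
    shortcuts: dict[str, str] = field(default_factory=dict)

    def create_shortcut(self, shortcut_path: str, target_path: str) -> None:
        # Only the reference is recorded; no data is copied.
        self.shortcuts[shortcut_path] = target_path

    def read(self, path: str) -> bytes:
        # Resolve a shortcut to its target; plain paths resolve to themselves.
        target = self.shortcuts.get(path, path)
        if target not in self.files:
            raise FileNotFoundError(f"{path} -> {target} (broken shortcut or missing path)")
        return self.files[target]

    def delete_shortcut(self, shortcut_path: str) -> None:
        # Removing the shortcut leaves the target data untouched.
        del self.shortcuts[shortcut_path]

    def move(self, old_path: str, new_path: str) -> None:
        # Moving/renaming the target does not update shortcuts that point at it.
        self.files[new_path] = self.files.pop(old_path)


lake = ToyOneLake(files={"sales/Files/orders.csv": b"id,amount"})
lake.create_shortcut("finance/Files/orders.csv", "sales/Files/orders.csv")
print(lake.read("finance/Files/orders.csv"))  # b'id,amount'

lake.delete_shortcut("finance/Files/orders.csv")
print(lake.read("sales/Files/orders.csv"))  # target unaffected: b'id,amount'

lake.create_shortcut("finance/Files/orders.csv", "sales/Files/orders.csv")
lake.move("sales/Files/orders.csv", "sales/Files/orders_v2.csv")
try:
    lake.read("finance/Files/orders.csv")
except FileNotFoundError as err:
    print("shortcut broken:", err)
```

Keeping shortcuts as a separate mapping of references, rather than copies of the data, is what makes the two behaviors fall out naturally: the shortcut and its target have independent lifecycles, and nothing keeps the reference in sync when the target moves.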

Advantages to using Fabric

Now that we have a solid understanding of Fabric’s design, we can turn to the tangible benefits it brings.

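- Embrace Collaboration: In today’s data-driven landscape, collaboration is key to discovering insights. Departments must be able to share data seamlessly, because obstacles to data sharing hinder effective collaboration. With every workload reading and writing to a single OneLake, Fabric removes many of these obstacles.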

- Enable Data Integrity/Single Source of Truth: Information is frequently housed in separate databases, causing discrepancies across departments. As data ages, its accuracy and usefulness diminish. OneLake addresses these issues by centralizing the data.

- Enterprise-wide Data Discovery: Fabric enables sharing of data between departments, which helps in understanding new opportunities and identifying enterprise-wide inefficiencies.

- Standardize Tools and Formats: Fabric ensures all departments/teams across the enterprise use the same analytics tools and data format.

- Efficient Resource Utilization: Because every engine works on the same data in OneLake, Fabric removes the need to copy a dataset or download it into Excel for analysis, reducing unnecessary resource usage.

- Decentralized Data Teams: Traditional data architectures revolve around data warehouses or data lakes that serve as a central repository for all organizational data. A dedicated data team manages this centralized structure and fulfills data requests from various business teams.

- Modern organizations are shifting to operating as multiple domains, each focused on a specific area of growth. When these domains must rely on a central data team for the data they need, the model does not scale and time-to-insight increases significantly.

- In Microsoft Fabric, each business team can have its own workspace containing both its data and its business logic, and each workspace can be assigned to a domain. Each domain has admins and contributors, typically including a data owner and one or more data engineers.

- Democratize Report Creation: Users are empowered to create and deliver actionable insights through their preferred applications.

- Federated Governance: As a company grows, it becomes increasingly difficult for a central IT team to manage data security at the most granular level. With Fabric, the central IT team sets automated guidelines and frameworks, while business teams handle aspects such as data quality and privacy in their own areas.

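- One Security: Security is defined once on the data in OneLake and enforced across every compute engine, rather than configured separately in each service.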

Fabric or Azure Individual Analytics Services?

The decision to migrate is not a one-size-fits-all scenario. Decision-makers grappling with the choice between Fabric and individual Azure analytics services must consider:

- PaaS Synapse Lifespan: Microsoft has stated that it has no current plans to retire Azure Synapse Analytics; if such plans arise, it will give advance notice and honor existing support commitments. Existing Synapse solutions will keep working.

- Data Maturity Level/Data Goals of the Organization: If your organization is just starting to collect data and does no complex analytics or reporting on top of it, choosing Synapse to run a couple of Spark jobs is more than sufficient. If your organization is in the middle of its data journey or is committed to becoming data-driven, Fabric is the better choice.

- Data Volume/Organization Size/Data Sharing: For small organizations or SMEs where a few business analysts work closely with the data team, or where no separate domains or teams collaborate on data, sticking with separate analytics services should be sufficient.

- Analytics Products Currently in Use: Customers who use Data Factory (or an equivalent) only for data movement should stay with Synapse unless a wider analytical need arises.

- Existing Product Commitment: Customers committed to other tools for the next few years (e.g., a separate ML product), and who use Synapse only for data engineering, may want to stay with Synapse.

- Fabric Maturity Level: Because it is built on top of existing Microsoft analytics products, Fabric is fairly mature. As a new service, however, it is wise to check whether every feature your existing Synapse projects require is already generally available (GA) in Fabric.

- SaaS vs PaaS Choice: This is the typical SaaS-versus-PaaS trade-off: PaaS (Synapse) offers more control but requires more maintenance, while SaaS (Fabric) is easier to manage.

- Migration Tools: Microsoft is actively building tools for migrating from existing analytics services to Fabric. Migration guides from Synapse DW, Synapse Spark, Synapse Data Explorer, and Azure Data Explorer to Fabric are currently available. Guides for services such as Azure Data Factory are a work in progress, and their status can be found in Fabric Community updates.

So, should your organization make the move to Fabric?

Whether to choose Fabric depends on your organization and its priorities. Based on Fabric’s current feature set, here is how we see it for our own projects; this will, of course, change as Fabric evolves.

Greenfield Projects: Fabric is a good place to start. The only factor left to evaluate is cost.

Existing Analytics Projects: Weigh the key factors above. If Fabric offers clear benefits, migrate; if not, stay with your current services. If there is no immediate need to migrate, it is worth waiting about six months, as many new features are being added.

Conclusion

This article has offered an overview of Microsoft Fabric: its design, its advantages, and the key considerations for migration. Whether you are embarking on a new analytics venture or considering a move from existing services, we hope it gives decision-makers the knowledge they need to navigate the transformative landscape of Microsoft Fabric.
