Having incorrect dates will screw up your data. Not knowing the point in time at which a data observation was recorded will render any historical and time-series analysis useless. Hence, we at Codit spend significant time making sure that the definition, serialization and interpretation of time is correct from the very beginning of the IoT value chain. The following are some basic principles for achieving this.

Add a gateway timestamp to all data observations

In general, we assume that data observations generated by machines are accompanied by a timestamp generated by the originating machine, and this is usually the case. However, we have found that machine clocks cannot be trusted. Operators of equipment tend to place little importance on the correctness of a machine’s internal clock, because machines typically do not need a precise clock to deliver the function they were designed for. We have seen machines in the field transmit dates with the wrong time offset, the wrong day, and even the wrong year. Furthermore, most machines are not connected to networks outside their operational environment, meaning they have no access to an NTP server to reliably synchronize their clocks.

If you connect your machines to the Internet through a field gateway, we highly recommend that you add a receivedInGateway timestamp when a data point arrives at the gateway. Because gateways have to be connected to the Internet, they have access to NTP servers and can generally provide reliable DateTime timestamps.

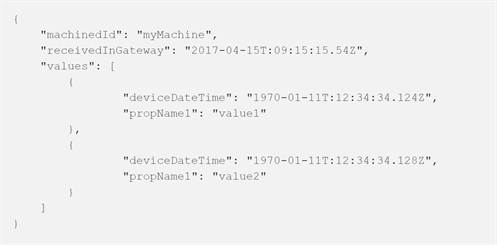
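As a rough illustration, stamping an observation at the gateway could look like the Python sketch below. The payload shape, the `machineTime` field and the `stamp_at_gateway` helper are assumptions made for this example; only the `receivedInGateway` name comes from the text above.

```python
from datetime import datetime, timezone


def stamp_at_gateway(observation, now=None):
    """Return a copy of the observation with a receivedInGateway field added.

    `now` can be injected for testing; by default the gateway's own
    (NTP-synchronized) clock is read in UTC.
    """
    received = now if now is not None else datetime.now(timezone.utc)
    stamped = dict(observation)  # leave the machine's own fields untouched
    stamped["receivedInGateway"] = (
        received.astimezone(timezone.utc)
        .isoformat(timespec="milliseconds")
        .replace("+00:00", "Z")
    )
    return stamped


# Hypothetical payload from a machine whose clock is set to the wrong year.
reading = {"machineTime": "2015-01-01T00:00:10.128Z", "value": 21.5}
fixed_now = datetime(2017, 4, 15, 11, 40, 0, 350000, tzinfo=timezone.utc)
stamped = stamp_at_gateway(reading, now=fixed_now)
print(stamped["receivedInGateway"])
```

The machine's original timestamp is kept next to the gateway timestamp, so nothing is lost and both values remain available for later analysis.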

A gateway timestamp can even allow you to rescue high-resolution observations that are plagued by a machine with an incorrect clock. Suppose, for example, that you get the following data in your cloud backend:

You can see that the originating machine’s clock is wrong. You can also see that the timestamps are being sent with sub-second precision. You cannot trust the sub-second precision of the “receivedInGateway” value because of network latency. However, a clock that is set to the wrong date still measures the intervals between observations accurately, so you can safely assume the sub-second precision at the machine is correct, and you can use the gateway’s timestamp to correct the wrong datetimes for high-precision analysis (in this case, the .128 and .124 sub-second measurements).
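One possible way to apply such a correction is sketched below in Python. The `machineTime` field, the helper names and the sample values are invented for illustration. The sketch also assumes that, within a batch, the smallest difference between gateway time and machine time is the best estimate of the machine's clock error, since that observation suffered the least network delay.

```python
from datetime import datetime


def parse_utc(text):
    """Parse an ISO8601 string ending in 'Z' into an aware datetime."""
    return datetime.fromisoformat(text.replace("Z", "+00:00"))


def correct_machine_times(observations):
    """Shift machine timestamps by the estimated clock error.

    The machine's relative (sub-second) spacing is preserved; only the
    constant offset of its clock is removed.
    """
    offsets = [
        parse_utc(o["receivedInGateway"]) - parse_utc(o["machineTime"])
        for o in observations
    ]
    clock_error = min(offsets)  # ASSUMPTION: least-delayed observation wins
    return [parse_utc(o["machineTime"]) + clock_error for o in observations]


# Hypothetical batch: the machine believes it is 2015.
batch = [
    {"machineTime": "2015-01-01T00:00:10.128Z",
     "receivedInGateway": "2017-04-15T11:40:00.350Z"},
    {"machineTime": "2015-01-01T00:00:10.252Z",
     "receivedInGateway": "2017-04-15T11:40:00.610Z"},
]
corrected = correct_machine_times(batch)
spacing = corrected[1] - corrected[0]
print(corrected[0].isoformat(), spacing)
```

In this sketch the corrected timestamps land in 2017, while the gap between the two observations is still the 124 ms measured by the machine, not the 260 ms seen at the gateway.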

Enforce a consistent DateTime serialization format

Dates can become very complicated very quickly. Take a look at the following datetime representations:

- 2017-04-15T11:40:00Z: follows the ISO8601 serialization format

- Sat Apr 15 2017 13:40:00 GMT+0200 (W. Europe Daylight Time): typical way dates are serialized on the web

- 04/15/2017 11:40:00: date serialization in American culture

- 15/04/2017 13:40:00GMT+0200

All of these dates represent the same point in time. However, if you get a mixture of these representations in your data set, your data scientists will probably spend a significant number of hours cleaning up the datetime mess inside it.
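To give a feel for the cleanup work involved, here is a minimal Python sketch that normalizes the four representations above to UTC. The format list and the `normalize` helper are assumptions for this example. It treats the American-style string, which carries no offset, as UTC, and it relies on English day and month names (Python's default "C" locale).

```python
from datetime import datetime, timezone

# Tried in order. Ambiguous inputs such as 04/05/2017 would silently match
# whichever day/month order comes first, which is part of the problem.
FORMATS = [
    "%Y-%m-%dT%H:%M:%S%z",         # ISO8601 with offset or Z
    "%a %b %d %Y %H:%M:%S GMT%z",  # web-style string
    "%d/%m/%Y %H:%M:%SGMT%z",      # day-first with offset
    "%m/%d/%Y %H:%M:%S",           # American, no offset
]


def normalize(text):
    """Return the timestamp as an aware datetime in UTC."""
    cleaned = text.split(" (")[0]  # drop a trailing "(time zone name)"
    for fmt in FORMATS:
        try:
            parsed = datetime.strptime(cleaned, fmt)
        except ValueError:
            continue
        if parsed.tzinfo is None:
            # ASSUMPTION: offset-less values are already in UTC.
            parsed = parsed.replace(tzinfo=timezone.utc)
        return parsed.astimezone(timezone.utc)
    raise ValueError(f"unrecognized datetime format: {text!r}")


samples = [
    "2017-04-15T11:40:00Z",
    "Sat Apr 15 2017 13:40:00 GMT+0200 (W. Europe Daylight Time)",
    "04/15/2017 11:40:00",
    "15/04/2017 13:40:00GMT+0200",
]
normalized = [normalize(s) for s in samples]
print(normalized[0].isoformat())
```

Every extra format in the data set means another entry in that list and another guess about what the producer meant.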

We recommend that our customers standardize their datetime representations using the ISO8601 standard:

YYYY-MM-DDTHH:mm:ss.sssZ

This is probably the only datetime format that the web has adopted as a de facto standard, and it is even documented in the ECMAScript specification.

Note the “Z” at the end of the string. We recommend that customers always transmit their dates in Zulu time, because analytics is easier when you can assume that all points in time share the same time offset. Otherwise, your data team has to write routines to normalize the dates in the data set. Furthermore, Zulu time does not suffer from time jumps in geographies that switch summer time on and off during the year.

(By the way, for those of you wondering, Zulu time, GMT and UTC time are, for practical purposes, the same thing. Also, none of them observes daylight saving changes.)
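A small Python sketch of what transmitting in Zulu time can look like is shown below. The `to_zulu` helper is a hypothetical name, and the sketch assumes the input already carries an offset or a trailing Z.

```python
from datetime import datetime, timezone


def to_zulu(text):
    """Convert an ISO8601 timestamp with an offset to YYYY-MM-DDTHH:mm:ss.sssZ."""
    parsed = datetime.fromisoformat(text.replace("Z", "+00:00"))
    if parsed.tzinfo is None:
        raise ValueError("timestamp has no time offset; cannot convert safely")
    return (
        parsed.astimezone(timezone.utc)
        .isoformat(timespec="milliseconds")
        .replace("+00:00", "Z")
    )


from_local = to_zulu("2017-04-15T13:40:00+02:00")
from_zulu = to_zulu("2017-04-15T11:40:00Z")
print(from_local, from_zulu)
```

Both inputs describe the same instant, so both calls produce the same string, which is what makes downstream comparisons and joins straightforward.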

At the very least, if they don’t want to use UTC time, we ask customers to add a correct time offset to their timestamps:

2017-04-15T13:40:00+02:00

However, in the field, we typically find timestamps with no time offset, like this:

2017-04-15T13:40:00

The problem with datetimes without a time offset is that, by definition, they have to be interpreted as local time. This is relatively easy to manage in a client/server application, where you can use the local system time (PC or server). However, IoT data is typically analyzed far from where it was recorded, so it is close to impossible to determine the correct point in time of a data observation whose timestamp does not include a time offset.
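The ambiguity is easy to demonstrate. In the Python sketch below, the same offset-less string is interpreted for two hypothetical sites, one at UTC+2 and one at UTC-4; the `interpret_at` helper and the site offsets are assumptions for illustration.

```python
from datetime import datetime, timedelta, timezone


def interpret_at(naive_text, utc_offset_hours):
    """Interpret an offset-less timestamp as local time at a given UTC offset."""
    naive = datetime.fromisoformat(naive_text)
    local = naive.replace(tzinfo=timezone(timedelta(hours=utc_offset_hours)))
    return local.astimezone(timezone.utc)


# The same string, read as local time at two hypothetical sites.
site_a = interpret_at("2017-04-15T13:40:00", 2)   # a site at UTC+2
site_b = interpret_at("2017-04-15T13:40:00", -4)  # a site at UTC-4
gap = site_b - site_a
print(site_a.isoformat(), site_b.isoformat(), gap)
```

Without knowing where, and under which daylight saving rules, the value was produced, the analyst cannot tell which of these instants the observation refers to.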

Make sure that your toolset supports the DateTime serialization format

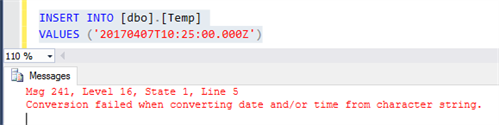

This might sound trivial, but you do sometimes find quirky implementations of ISO8601 among software vendors. For instance, as of this writing, Microsoft Azure SQL Server only partially supports ISO8601 as a serialization format for DateTime2 types: it accepts the ISO8601 literal format, but not the compact format. So if you depend on SQL for your analytics and storage, make sure you don’t standardize on the ISO8601 compact form.
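If a data source already emits the compact form, one option is to rewrite it before the data reaches such a tool. The Python sketch below assumes that "compact" refers to the ISO8601 variant without separators (for example 20170415T114000Z) and that the value carries an offset or a Z; `compact_to_separated` is a hypothetical helper.

```python
from datetime import datetime


def compact_to_separated(text):
    """Rewrite a separator-less ISO8601 timestamp with '-' and ':' separators."""
    # ASSUMPTION: input looks like YYYYMMDDTHHMMSS followed by Z or +hhmm.
    parsed = datetime.strptime(text, "%Y%m%dT%H%M%S%z")
    return parsed.isoformat().replace("+00:00", "Z")


zulu_form = compact_to_separated("20170415T114000Z")
offset_form = compact_to_separated("20170415T134000+0200")
print(zulu_form, offset_form)
```

Whether the rewritten strings are accepted still depends on the target tool, so it is worth testing with real samples before committing to a format.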

Conclusion

Dates are easy for humans to interpret, but they can be quite complex to deal with in computer systems. Don’t let the apparent simplicity of dates (from a human perspective) fool you into underestimating the importance of defining proper, standardized DateTime practices. In summary:

- Machine clocks cannot be trusted. If you are using a field gateway, make sure you add a gateway timestamp.

- Standardize on a commonly understood datetime serialization format, such as ISO8601.

- Make sure your date serialization includes a time offset.

- Prefer to work with Zulu/UTC/GMT times instead of local times.

- Ensure your end-to-end tooling supports the datetime serialization format you have selected.
