The most common way of making use of such AI models is through chatbots. Interactions with such chatbots are possible through the use of prompts. Various platforms provide access to these AI models, each offering unique features and capabilities.

At Codit, we extensively utilize Microsoft’s Azure cloud computing platform for our daily work tasks. Therefore, a suitable option for interacting with generative AI would be to use Microsoft’s own Azure OpenAI Service. Azure OpenAI Service provides REST API access to OpenAI’s powerful language models, which include GPT-4o, GPT-4 Turbo with Vision, GPT-4, GPT-3.5-Turbo, and Embeddings model series. These models can be easily adapted to specific tasks including but not limited to content generation, summarization, image understanding, semantic search, and natural language-to-code translation. Users can access the service through REST APIs, Python SDK, or through a web-based interface using Azure OpenAI Studio.

Conversational AI models allow users to engage in natural language conversations by simply typing prompts. A prompt is a specific input or instruction given to an AI-based conversational agent to elicit a particular response or action. It is typically crafted to guide the chatbot in generating relevant, coherent, and contextually appropriate replies. Prompts can range from simple questions to complex statements or scenarios.

Prompt effectiveness depends on how well users frame the desired outcome and provide a necessary context. This is known as Prompt Engineering. This blog post delves into the world of prompt engineering, which reveals how carefully crafting input prompts enhances the output of conversational AI, transforming it into a flexible tool in the life of a modern-day developer. Various principles and techniques of prompt engineering will be explored, showcasing creative methods to customize conversational AI’s capabilities to specific instructions in order to acquire the most relevant response.

The Iterative Approach ♻️

When striving to achieve desired results through prompts, your initial attempt might not always be successful. Achieving precise outcomes tailored to your specific needs often requires a process of trial and error. Therefore, adopting an iterative approach is crucial, involving the refinement of prompts based on feedback from the model.

The Step-by-Step approach:

- Begin with a Prompt: Start by providing a prompt to guide the model.

- Evaluate Performance: Analyse where the model’s response fell short and determine the reasons.

- Clarify Instructions and Allow Time: Refine your instructions for clarity and consider allowing the model additional processing time.

- Enhance with Examples: Improve your prompts by including examples to guide the model towards better responses.

Remember:

- Keep prompts clear and specific:

- Ensure prompts are concise and precise to achieve better results.

- Identify issues:

- Carefully examine the results to understand why they may not meet your expectations.

- Refine your prompts:

- Continuously improve your prompts based on insights gained.

- Repeat the process:

- Recognize that this is a cyclical process, and repetition is essential for success.

Things to bear in mind while prompting

Hallucinations

In the world of conversational AI models, the term “hallucinations” refers to erroneous, false, or inaccurate responses resulting from a model’s learning process. These occasionally arise from the model being trained on incomplete or biased data, limitations in training, gaps in knowledge, and looping in the same input content. Users can minimize hallucinations by critically assessing responses, providing clear prompts, and refining iteratively.

Limitations

Conversational AI models, while advanced, face several limitations. They struggle with maintaining context, understanding nuance, and handling complex problem-solving. Their knowledge can be static and sometimes inaccurate, and they often lack true creativity and emotional intelligence. Ethical concerns, such as bias and data privacy, are significant, as is the risk of misuse. These models depend heavily on the quality of their training data and require substantial computational resources. Additionally, platforms may not provide models with the capability to search the internet, decreasing the chances of receiving up-to-date information from the model.

Now, let us proceed to some practical details regarding the creation of effective prompts (please note that while the example prompts and responses were performed using ChatGPT, these principles can be applied to any conversational AI agent):

Prompting Tips

Tip 1: Be Clear and Specific

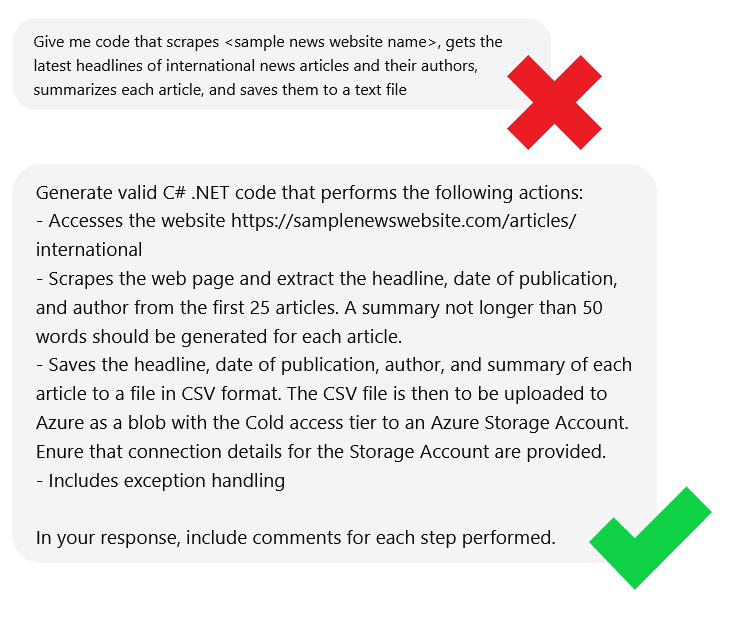

Create instructions that are both explicit and precise. The more specific and detailed your instructions, the lower the chances of receiving irrelevant or inaccurate responses. In general, opt for longer prompts that provide additional clarity and context to guide the model toward the desired output.

The following techniques will be of aid to enhance the clarity of your instructions:

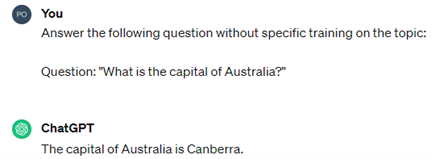

Technique 1: Write instructions that are as clear, specific, and explicit as you can possibly make them ✍️

Utilizing this technique reduces the chances of getting irrelevant or incorrect answers for your prompt. A longer prompt is often better – it offers more clarity to the model.

Try to use trigger phrases such as ‘Generate’, ‘Give me a step-by-step guide’, or ‘Summarise’ when beginning your prompt:

Technique 2: Utilize Delimiters (Punctuation, Language Tags)

Utilize delimiters, such as punctuation or language tags, to delineate separate sections within your prompt. This aids the model in identifying which text it should prioritize.

Caution should be exercised to prevent Prompt Injection. Prompt injection is a type of attack where someone inputs carefully crafted instructions to trick an AI model into giving incorrect or harmful responses. This can be done directly by inserting misleading prompts or indirectly by changing the context the model reads. Such attacks pose security risks, can spread misinformation, and undermine the trustworthiness of AI systems. To prevent this, inputs should be carefully checked, the model’s access to information should be controlled, and users should be informed about these risks.

Delimiters like “” or <> or “ etc. are useful in clearly indicating distinct parts of the input, thereby mitigating the risk of Prompt Injection.

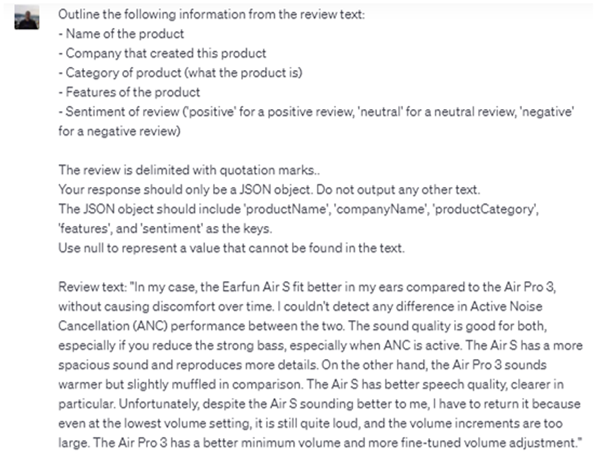

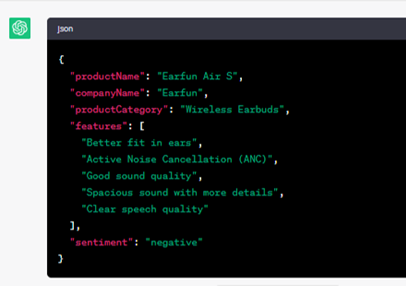

Technique 3: Define the Response Format

Specify the response format required, such as requesting mock data in JSON format and defining JSON object keys. The response format is not confined only to software data structures; one may create custom structures by specifying a schema or format of their own. This versatile approach extends to generating C# XML documentation or OpenAPI summaries for API endpoints, for example.

Let’s see Techniques 2 and 3 in a practical scenario. This example below also makes use of sentiment analysis:

Technique 4: Validate Assumptions ✋

Direct the model to assess assumptions and consider potential edge cases. You can incorporate conditional logic in the language to prompt the model to verify specific conditions. For instance, if the input lacks text with a particular format or necessary information, instruct the model to cease processing the prompt.

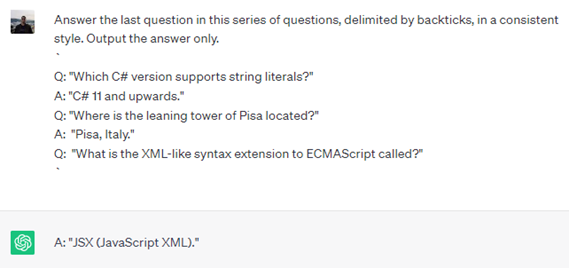

Technique 5: “Few-shot” Prompting 🔫

Contrasting with “zero-shot” learning, where the model successfully tackles an untrained problem, the few-shot prompting technique involves presenting instances of successful task executions before requesting the model to perform the actual task. This approach is adopted to establish a consistent style and convey expectations regarding the desired output.

In the given example, the model consistently provides answers in a similar fashion to previous responses, encapsulated in quotation marks and presented concisely.

Technique 6: Avoid saying what not to do 🙅 say what to do instead ✅

Encouraging specificity in prompts is a key strategy that directs attention to the intricacies essential for receiving high-quality responses. Emphasizing the importance of detailed instructions enhances the likelihood of obtaining accurate and tailored outputs from the model. It is advised to steer clear of framing prompts in the negative, by avoiding the use of “Do not” instructions. Instead, adopt a positive and constructive approach to prompt formulation, specifying what actions or information are desired to guide the model effectively:

Tip 2: Allow Adequate Processing Time ⌛

When engaging with conversational AI models, providing additional details often proves beneficial in attaining desired results. A vague prompt or a prompt containing an unexplained complex task is likely to yield less favorable outcomes.

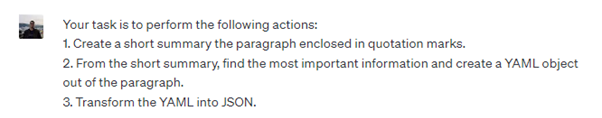

Technique 1: Break Down the Task 📃

Describe the necessary steps for the model to accomplish a task explicitly. Instruct the model to execute a series of actions, outlining the ordered sequence of instructions needed to achieve the desired outcome:

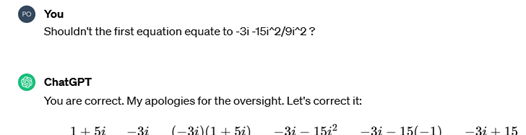

Technique 2: Verify Responses

Confirming the Validity of Solutions to Problems, and the validity of cited References:

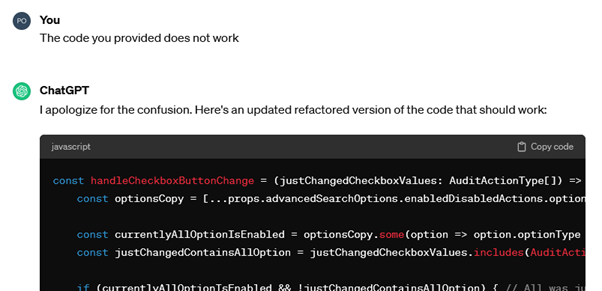

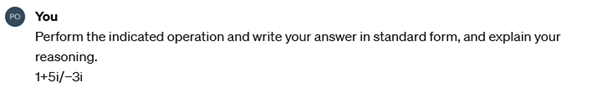

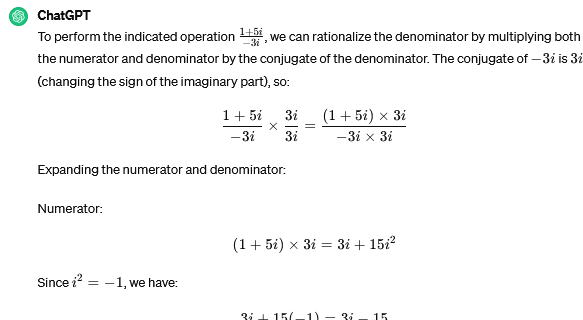

It may be the case that a conversational AI model gives an incorrect solution to a specific problem, and/or gives the incorrect reasoning for such solution, or vice-versa.

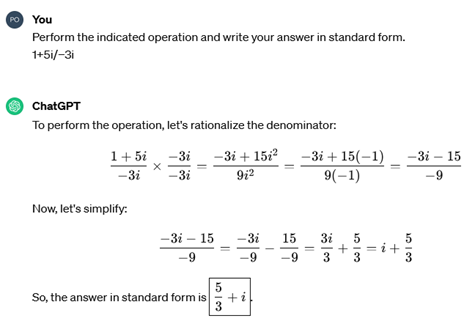

It is clear that in this case, the conversational AI model has made errors in solving this algebra problem. Rather than accepting its answer immediately, it is important to analyse the reasoning behind the solution and identify any mistakes. It is worth noting that conversational AI models are built for generating text and may not consistently provide accurate solutions for complex questions.

When interacting with conversational AI, it is ideal to verify any references mentioned in responses for accuracy. One may request and access these references – which may be in forms such as papers or links to documentation – to confirm the validity of the information. It is important to note that while the model may provide reference links to web pages, these might not always align with the source, especially in the free version. The web pages might not even contain relevant information to back up the response of a given prompt, or downright not exist at all.

Code and Data Validation:

To ensure the reliability of generated code, consider pasting it into your preferred Integrated Development Environment (IDE) and checking for compiler errors as well as logical issues. The generated code may fail to compile altogether or may not execute the intended task or produce the expected output. Similarly, when dealing with data such as JSON, it’s advisable to validate it using an appropriate validator tool. This thorough validation process contributes to the overall accuracy and trustworthiness of the information and code produced by a conversational AI model.

Technique 3: Encourage Independent Problem Solving 🧑🏭

Encourage the model to develop its own solution prior to arriving at a conclusion. Explicitly request the model to engage in independent reasoning and problem-solving, thereby promoting a thoughtful approach rather than precipitate conclusions. Utilize phrases such as “explain your reasoning” or “explain your answer” to facilitate this process. By incorporating one of these phrases and addressing the preceding mathematics problem, the following response is generated, which includes detailed explanations:

Conclusion

I encourage you to experiment with the provided tips and techniques, as I am confident that their application will lead to improved results. By implementing these strategies, you can enhance your outcomes and achieve greater success in your endeavors. These tips will also give you a head start when utilizing Azure OpenAI for personal and professional projects. Take the opportunity to explore these methods first-hand and observe the positive impact they can have on your performance. Thanks for reading! 😊

Interested in talking to us about your AI journey?

Contact Steven

IoT Data & AI Domain Lead - Data & AI Solution Architect